WebRTC technology: audio and video chat directly in the browser, without installing any application

WebRTC (Web Real-Time Communications) is a standard that describes real-time transmission of streaming audio, video and content to and from the browser without installing plug-ins or other extensions. The standard lets you turn the browser into a video conferencing terminal: just open a web page to start communicating.

What is WebRTC?

In this article we look at everything a regular user needs to know about WebRTC technology. We consider the advantages and disadvantages of the project, reveal some secrets, and explain how WebRTC works and where it is used.

What do you need to know about WebRTC?

Evolution of video communication standards and technologies

Sergey Yutzaytis, Cisco, Video+Conference 2016

How WebRTC works

On the client side

- The user opens a page containing an HTML5 video tag.

- The browser requests access to the user's webcam and microphone.

- JavaScript code on the page controls the connection parameters (the IP addresses of the WebRTC server or of other WebRTC clients) to traverse NAT and firewalls.

- On receiving information about the remote party or a conference stream, the browser begins negotiating the audio and video codecs to be used.

- Encoding and transmission of streaming data between the WebRTC clients (in our case, between the browser and the server) begins.
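The codec-matching step can be pictured as intersecting the codec lists of the two sides. The sketch below is a simplified model, not the browser's actual negotiation code. It assumes each side is described by a plain list of codec names in preference order. It keeps the offering side's order, which is one possible policy.

```typescript
// Simplified model of codec matching: keep only the codecs both sides support,
// in the offerer's preference order. Names are compared case-insensitively
// because SDP encoding names such as "opus" and "OPUS" denote the same codec.
function negotiateCodecs(offered: string[], supported: string[]): string[] {
  const local = new Set(supported.map((codec) => codec.toLowerCase()));
  return offered.filter((codec) => local.has(codec.toLowerCase()));
}

// Hypothetical capability lists for a browser and a conferencing server.
const browserVideo = ["VP8", "H264", "VP9"];
const serverVideo = ["H264", "VP8"];
console.log(negotiateCodecs(browserVideo, serverVideo)); // ["VP8", "H264"]
```

An empty result means the two sides share no codec, and no media of that kind can flow between them.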

On the WebRTC server side

A video server is not required for exchanging data between two participants, but if you need to combine several participants in one conference, a server is necessary.

The video server receives media traffic from various sources, converts it and sends it to users who use WebRTC as their terminal.

The WebRTC server also receives media traffic from WebRTC peers and forwards it to conference participants who use desktop or mobile applications, if there are any.
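Simple arithmetic shows why a server becomes necessary as a conference grows. In a full mesh, every browser sends its stream directly to every other browser. Each of n participants then uploads n - 1 streams, and the group needs n(n - 1)/2 peer connections. With a central server, each participant keeps a single connection. The helper below is only a counting sketch and ignores bitrate, codecs and the server's own processing load.

```typescript
interface TopologyCost {
  connections: number;           // total peer-to-peer or client-server links
  uploadsPerParticipant: number; // streams each participant must send
}

// Full mesh: every pair of participants is connected directly.
function meshCost(participants: number): TopologyCost {
  return {
    connections: (participants * (participants - 1)) / 2,
    uploadsPerParticipant: Math.max(participants - 1, 0),
  };
}

// Central server (for example a mixing server): one link per participant.
function serverCost(participants: number): TopologyCost {
  return {
    connections: participants,
    uploadsPerParticipant: participants > 0 ? 1 : 0,
  };
}

for (const n of [2, 5, 10]) {
  console.log(n, meshCost(n), serverCost(n));
}
```

For two participants a direct connection is the cheapest option. By ten participants, each browser in a mesh would have to encode and upload nine streams.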

Advantages of the standard

- No installation is required.

- Very high-quality communication, thanks to:

  - Modern video codecs (VP8, H.264) and audio codecs (Opus).

  - Automatic adjustment of stream quality to connection conditions.

  - Built-in echo cancellation and noise reduction.

  - Automatic gain control (AGC) for participants' microphones.

- High security: all connections are protected and encrypted using the DTLS and SRTP protocols.

- There is a built-in mechanism for capturing content, such as the desktop.

- Any control interface can be implemented with HTML5 and JavaScript.

- The interface can be integrated with any back-end system using WebSockets.

- It is an open-source project, so it can be built into your own product or service.

- Truly cross-platform: the same WebRTC application works equally well on any operating system, desktop or mobile, provided the browser supports WebRTC. This significantly reduces software development costs.

Disadvantages of the standard

- Group audio and video conferencing requires a video conferencing server that mixes video and audio from the participants, because the browser cannot synchronize several incoming streams with one another.

- WebRTC solutions are mutually incompatible, because the standard describes only how video and audio are transmitted. It leaves subscriber addressing, presence tracking, messaging and file transfer, scheduling and everything else to the vendor.

- In other words, you cannot call from one developer's WebRTC application into another developer's WebRTC application.

- Mixing group conferences requires substantial computing resources. This type of video communication therefore requires either a paid subscription or investment in your own infrastructure, where each conference needs one physical core of a modern processor.

WebRTC secrets: how vendors benefit from a breakthrough web technology

Tsahi Levent-Levi, BlogGeek.me, Video+Conference 2015

WebRTC for the video conferencing market

Growth in the number of video conferencing terminals

WebRTC has had a strong impact on the development of the video conferencing market. After the first browsers with WebRTC support were released in 2013, the potential number of video conferencing terminals worldwide immediately grew by 1 billion devices. In essence, every browser became a video conferencing terminal, in no way inferior to its hardware counterparts in terms of capabilities.

Use in specialized solutions

Using various JavaScript libraries and the APIs of cloud WebRTC services makes it easy to add video communication to any web project. Previously, to transmit data in real time, developers had to study how the protocols work and rely on other companies' technology. That technology most often required additional licensing, which increased costs. WebRTC is already actively used in services such as "call from the website" and "online support chat".

Former Skype for Linux users

In 2014, Microsoft announced the end of support for Skype for Linux, which greatly irritated IT specialists. WebRTC is not tied to an operating system because it is implemented at the browser level, so Linux users can find a full-fledged Skype replacement in WebRTC-based products and services.

Competition with Flash

WebRTC and HTML5 dealt a fatal blow to Flash, which was already past its best years. Since 2017, leading browsers have officially stopped supporting Flash, and the technology has finally disappeared from the market. Still, Flash deserves credit: it created the web conferencing market and provided the technical means for live communication in browsers.

Video presentation: WebRTC

Dmitry Odintsov, TrueConf, Video+Conference, October 2017

Codecs in WebRTC

Audio codecs

WebRTC uses the Opus and G.711 codecs to compress audio traffic.

G.711 is the oldest voice codec. It has a high bit rate (64 kbps) and is most often used in traditional telephony systems. Its main advantage is the minimal computational load, thanks to lightweight compression algorithms. The codec applies only a low level of voice signal compression and adds no extra audio delay during communication between users.

G.711 is supported by a large number of devices. Systems that use this codec are easier to work with than those based on other audio codecs (G.723, G.726, G.728, etc.). G.711 scored 4.2 in MOS testing. A score of 4-5 is the highest and means good quality, similar to or even better than voice transmission in ISDN.
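The 64 kbps figure follows directly from how G.711 works. Telephone audio is sampled 8000 times per second, and each sample is stored in 8 bits (8000 × 8 = 64000 bit/s). The "light compression" is companding: a 16-bit linear sample is squeezed into 8 bits on a roughly logarithmic scale. Below is a sketch of the μ-law variant (PCMU) using the classic bias-and-segment method. The A-law variant (PCMA) follows the same idea with different constants and is not shown.

```typescript
const SAMPLE_RATE_HZ = 8000; // G.711 narrowband sampling rate
const BITS_PER_SAMPLE = 8;   // one companded byte per sample
const MULAW_BIAS = 0x84;     // 132, added before locating the segment
const MULAW_CLIP = 32635;    // largest magnitude that still fits after biasing

// Encode one signed 16-bit linear PCM sample as an 8-bit mu-law byte.
function linearToMulaw(sample: number): number {
  const sign = sample < 0 ? 0x80 : 0x00;
  const magnitude = Math.min(Math.abs(sample), MULAW_CLIP) + MULAW_BIAS;

  // The exponent (segment) is the position of the highest set bit above bit 7.
  let exponent = 7;
  for (let mask = 0x4000; (magnitude & mask) === 0 && exponent > 0; mask >>= 1) {
    exponent--;
  }
  // Keep the 4 bits just below the leading one as the mantissa.
  const mantissa = (magnitude >> (exponent + 3)) & 0x0f;

  // mu-law bytes are transmitted bit-inverted.
  return ~(sign | (exponent << 4) | mantissa) & 0xff;
}

const bitrate = SAMPLE_RATE_HZ * BITS_PER_SAMPLE;
console.log(bitrate); // 64000
console.log(linearToMulaw(0).toString(16)); // ff
```

Each sample needs only a handful of shifts and comparisons, which is why the codec's computational load is so low.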

Opus is a codec with low coding delay (from 2.5 ms to 60 ms), support for variable bitrate and a high compression ratio. This makes it ideal for streaming audio over networks with variable throughput. Opus is a hybrid that combines the best features of the SILK codec (voice compression, elimination of human speech distortion) and CELT (audio data coding). The codec is freely available, and developers who use it do not have to pay royalties to rights holders. Compared with other audio codecs, Opus wins on many metrics. It has eclipsed quite popular low-bitrate codecs such as MP3, Vorbis and AAC-LC. Opus reproduces sound closer to the original than AMR-WB and Speex do. This codec is the future, which is why the creators of WebRTC included it in the mandatory set of supported audio standards.
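The 2.5 ms to 60 ms range corresponds to the frame durations Opus allows: 2.5, 5, 10, 20, 40 and 60 ms. Opus in WebRTC runs at a 48 kHz clock rate, so the number of samples per frame is a simple product, as shown in this sketch. Shorter frames reduce latency but add per-packet overhead. 20 ms is a common choice for real-time calls, but treat that as typical practice rather than a requirement.

```typescript
// Frame durations permitted by the Opus codec (RFC 6716), in milliseconds.
const OPUS_FRAME_DURATIONS_MS = [2.5, 5, 10, 20, 40, 60];
const OPUS_CLOCK_RATE_HZ = 48000; // RTP clock rate used for Opus

// Number of audio samples (per channel) in one Opus frame of the given duration.
function opusSamplesPerFrame(durationMs: number): number {
  if (!OPUS_FRAME_DURATIONS_MS.includes(durationMs)) {
    throw new RangeError(`Opus does not support ${durationMs} ms frames`);
  }
  return (OPUS_CLOCK_RATE_HZ * durationMs) / 1000;
}

for (const ms of OPUS_FRAME_DURATIONS_MS) {
  console.log(`${ms} ms -> ${opusSamplesPerFrame(ms)} samples`);
}
// 2.5 ms -> 120 samples ... 60 ms -> 2880 samples
```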

Video codec

Choosing a video codec for WebRTC took the developers several years, and in the end they settled on H.264 and VP8. Almost all modern browsers support both codecs. It is enough for video conferencing servers working with WebRTC to support only one of them.

VP8 is a free video codec with an open license. It decodes the video stream quickly and is more resistant to frame loss. The codec is versatile and easy to implement on hardware platforms, so developers of video conferencing systems very often use it in their products.

H.264 is a paid video codec that became known much earlier than its counterpart. It compresses the video stream heavily while preserving high video quality. The codec is widespread in hardware video conferencing systems, which is why it is used in the WebRTC standard.

Google and Mozilla actively promote the VP8 codec, while Microsoft, Apple and Cisco promote H.264 (to ensure compatibility with traditional video conferencing systems). This creates a very big problem for developers of cloud WebRTC solutions. If all conference participants use the same browser, it is enough to mix the conference once with one codec. If participants use different browsers, including Safari or Edge, the conference has to be encoded twice with different codecs. That doubles the system requirements for the media server and, as a result, the cost of subscriptions to WebRTC services.
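The cost problem described above can be expressed as a count of encoding passes per mixed conference. This sketch assumes, as the paragraph does, that the server produces one mixed output per codec. The per-browser codec lists are made up for illustration, since real browser support varies by version. The rule is deliberately simple and not optimal in general: one pass if some codec is supported by everyone, otherwise one pass per distinct preferred codec.

```typescript
// Each participant is described by the video codecs its browser can decode,
// in preference order. These lists are hypothetical examples.
type Participant = { name: string; codecs: string[] };

// Number of times a mixing server must encode the mixed picture.
function encodingPasses(participants: Participant[]): number {
  if (participants.length === 0) return 0;
  // A codec every participant supports lets a single encode serve everyone.
  const common = participants[0].codecs.filter((codec) =>
    participants.every((p) => p.codecs.includes(codec)),
  );
  if (common.length > 0) return 1;
  // Otherwise encode once per distinct preferred codec.
  return new Set(participants.map((p) => p.codecs[0])).size;
}

const sameBrowser: Participant[] = [
  { name: "A", codecs: ["VP8"] },
  { name: "B", codecs: ["VP8"] },
];
const mixedBrowsers: Participant[] = [
  { name: "A", codecs: ["VP8"] },
  { name: "B", codecs: ["H264"] },
];
console.log(encodingPasses(sameBrowser));   // 1
console.log(encodingPasses(mixedBrowsers)); // 2
```

Because encoding dominates a mixing server's workload, the number of passes is a rough multiplier on the CPU needed per conference.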

WebRTC API

WebRTC technology is based on three main APIs:

- MediaStream (responsible for the web browser receiving the audio and video signal from the camera or the user's desktop).

- RTCPeerConnection (responsible for the connection between browsers for exchanging the media data received from the camera, microphone and desktop). Its duties also include signal processing (cleaning the signal of extraneous noise, adjusting microphone volume) and control over the audio and video codecs used.

- RTCDataChannel (provides bidirectional data transmission over the established connection).

MediaStream. Before accessing the user's microphone and camera, the browser asks for permission. In Google Chrome, you can configure access in advance in the Settings section. In Opera and Firefox, devices are selected at the moment of access, from a drop-down list. The permission request appears every time when using HTTP, and only once when using HTTPS.

RTCPeerConnection. Every browser participating in a WebRTC conference must have access to this object. Thanks to RTCPeerConnection, media data from one browser can get through even NAT and firewalls. To transfer media successfully, participants must exchange the following data over some transport, such as WebSockets:

- the initiating participant sends the second participant an offer SDP (a data structure describing the characteristics of the media stream it will transmit);

- the second participant forms an answer SDP and sends it back to the initiator;

- the participants then exchange ICE candidates, if any (if they are behind NAT or firewalls).

After this exchange completes successfully, media (audio and video) is transmitted directly between the participants.
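An offer or answer SDP is plain text made of `type=value` lines. The sketch below extracts each media section (`m=` line) and the codecs mapped to its payload types by `a=rtpmap` lines. This is the information the peers compare during the offer/answer exchange. The parser is deliberately minimal and ignores `a=fmtp`, direction attributes and everything else. The sample SDP is a trimmed, hand-written fragment rather than real browser output.

```typescript
interface MediaSection {
  kind: string;                // "audio", "video" or "application"
  payloadTypes: string[];      // payload type numbers listed on the m= line
  codecs: Map<string, string>; // payload type -> "name/clockrate[/channels]"
}

// Parse the m= and a=rtpmap: lines of an SDP blob.
function parseSdpMedia(sdp: string): MediaSection[] {
  const sections: MediaSection[] = [];
  for (const rawLine of sdp.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line.startsWith("m=")) {
      // m=<media> <port> <proto> <fmt> ...
      const [kind, , , ...payloadTypes] = line.slice(2).split(" ");
      sections.push({ kind, payloadTypes, codecs: new Map() });
    } else if (line.startsWith("a=rtpmap:") && sections.length > 0) {
      // a=rtpmap:<payload type> <encoding name>/<clock rate>[/<channels>]
      const [pt, encoding] = line.slice("a=rtpmap:".length).split(" ");
      sections[sections.length - 1].codecs.set(pt, encoding);
    }
  }
  return sections;
}

const offer = [
  "v=0",
  "o=- 4611731400430051336 2 IN IP4 127.0.0.1",
  "s=-",
  "t=0 0",
  "m=audio 9 UDP/TLS/RTP/SAVPF 111 0",
  "a=rtpmap:111 opus/48000/2",
  "a=rtpmap:0 PCMU/8000",
  "m=video 9 UDP/TLS/RTP/SAVPF 96",
  "a=rtpmap:96 VP8/90000",
].join("\r\n");

for (const section of parseSdpMedia(offer)) {
  console.log(section.kind, Array.from(section.codecs.values()));
}
```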

RTCDataChannel. Support for data channels appeared in browsers relatively recently, so this API can be considered only when using WebRTC in Mozilla Firefox 22+ and Google Chrome 26+. With it, participants can exchange text messages in the browser.

Connecting via WebRTC

Supported desktop browsers

- Google Chrome (17+) and all browsers based on the Chromium engine;

- Mozilla Firefox (18+);

- Opera (12+);

- Safari (11+);

Supported Mobile Browsers for Android

- Google Chrome (28+);

- Mozilla Firefox (24+);

- Opera Mobile (12+);

- Safari (11+).

WebRTC, Microsoft and Internet Explorer

For a very long time Microsoft kept silent about WebRTC support in Internet Explorer and in its new Edge browser. The people in Redmond do not really like giving users technologies they do not control; that is simply their policy. But things gradually got moving, because WebRTC could no longer be ignored, and ORTC, a project derived from the WebRTC standard, was announced.

According to the developers, ORTC is an extension of the WebRTC standard with an improved set of JavaScript and HTML5 APIs. In plain language, everything stays the same, except that Microsoft rather than Google will control the standard and its development. The codec set is extended with H.264 and some G.7xx audio codecs used in telephony and hardware video conferencing systems. Built-in support for RDP (for content sharing) and messaging may appear. Internet Explorer users, by the way, are out of luck: ORTC will be supported only in Edge. And, naturally, such a set of protocols and codecs integrates with Skype for Business with little effort, which opens up even more business applications for WebRTC.

Today, WebRTC is a hot technology for streaming audio and video in browsers. Conservative technologies such as HTTP Streaming and Flash are better suited to distributing recorded content (video on demand). They are significantly inferior to WebRTC for real-time and live broadcasts, that is, wherever minimal video delay is needed so that the audience sees what is happening "live".

The ability to deliver high-quality real-time communication stems from the WebRTC architecture itself, which uses UDP to transport video streams. UDP is the standard basis for low-latency video transmission and is widely used in real-time communication systems.

Communication latency matters in online broadcasting systems, webinars and other applications that require interactive communication between the video source and end users.

Another weighty reason to try WebRTC is, of course, that it is a trend. Today every Chrome browser on Android supports this technology. That means millions of devices are ready to view a broadcast without installing any additional software or changing any settings.

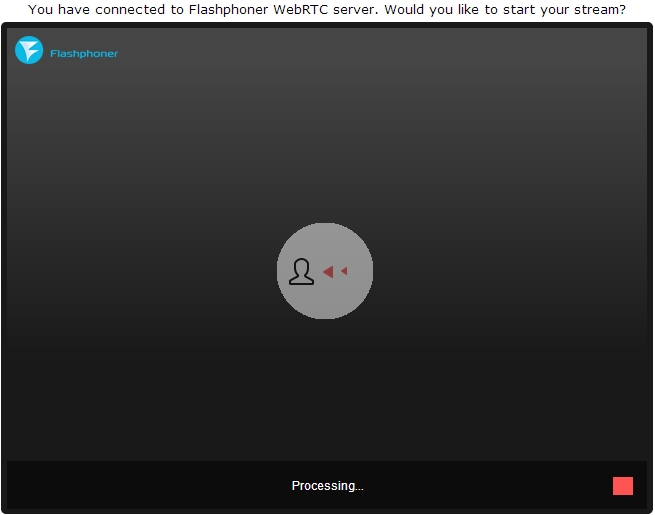

To try WebRTC in practice and run a simple online broadcast on it, we used Flashphoner WebRTC Media & Broadcasting Server. Its features include one-to-many broadcasting of WebRTC streams, as well as support for IP cameras and video surveillance systems via RTSP. In this review we focus on web broadcasts and their features.

Installing WebRTC Media & Broadcasting Server

There was no Windows version of the server, and I did not want to install a VMware virtual machine with Linux, so testing online broadcasts on a home Windows computer did not work out. To save time, we decided to rent an instance from a cloud hosting provider.

It was CentOS 6.5 x86_64, without any pre-installed software, in a data center in Amsterdam. So all we had was the server and SSH access to it. For those familiar with Linux console commands, installing the WebRTC server promises to be simple and painless. Here is what we did:

1. Download the archive:

$ wget https://syt/download-wcs5-server.tar.gz

2. Unpack it:

$ tar -xzf download-wcs5-server.tar.gz

3. Install:

$ cd FlashphonerWebCallServer

During installation, enter the server's IP address: xxx.xxx.xxx.xxx

4. Activate the license:

$ cd /usr/local/FlashphonerWebCallServer/bin

$ ./activation.sh

5. Start the WCS server:

$ service webcallserver start

6. Check the log:

$ tail -f /usr/local/FlashphonerWebCallServer/logs/flashphoner_manager.log

7. Check that both processes are running:

$ ps aux | grep Flashphoner

The installation is complete.

Testing WebRTC online broadcasts

Testing the broadcasts turned out to be simple. Besides the server, there is a web client consisting of a dozen JavaScript, HTML and CSS files, which was deployed to the /var/www/html folder during installation. The only thing we had to do was enter the server's IP address in the flashphoner.xml config, so that the web client could connect to the server over HTML5 WebSockets. Here is how the testing went.

1. Open the test client page index.html in Chrome.

2. To start the broadcast, click the "Start" button in the middle of the screen.

Before doing so, make sure the webcam is connected and ready. There are no special requirements for the webcam. For example, we used a standard camera built into a laptop, with a resolution of 1280 × 800.

Chrome will ask for access to the camera and microphone, so that the user understands their video will be sent to an Internet server and can give permission.

3. The interface after the video stream from the camera has been successfully broadcast to the WebRTC server. In the upper right corner, an indicator shows that the stream is going to the server. The Stop button in the bottom corner stops sending video.

Note the link in the bottom field. It contains the unique identifier of this stream, so anyone can join the viewing simply by opening this link in a browser. To copy it to the clipboard, click the "Copy" button.

Real applications include webinars, lectures, online broadcasts and interactive TV. In these, developers will have to distribute this identifier to particular groups of viewers so that they connect to the right streams. That is application logic: WebRTC Media & Broadcasting Server does not deal with it and only distributes video.

5. The connection is established and the viewer sees the stream on screen. Now the viewer can send the link to someone else, stop playback, or switch to full-screen mode using the controls in the lower right corner.

WebRTC online broadcast server: test results

During the tests, the latency looked perfect. Ping to the data center was about 100 milliseconds, and the delay was indistinguishable to the eye. From this we can assume the real delay is about the same 100 ms, plus or minus a few tens of milliseconds of buffering time. Flash video does not perform as well as WebRTC in similar tests. On a similar network, if you move your hand, the movement appears on screen only after one or two seconds.
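The reasoning behind the "100 ms plus buffering" estimate can be written as a back-of-the-envelope formula. Ping reports a round-trip time. Publishing to the server costs roughly one one-way trip (RTT/2), and delivering to the viewer costs roughly another. When the publisher and viewer have similar paths to the data center, the network share is therefore about one RTT. The buffering value below is an assumed illustrative number, not a measurement from the test.

```typescript
// Rough end-to-end latency of a one-to-many broadcast through a media server.
// Assumes symmetric paths, so each one-way trip is about half the ping RTT.
function estimateBroadcastLatencyMs(
  publisherRttMs: number,
  viewerRttMs: number,
  bufferingMs: number,
): number {
  const uplink = publisherRttMs / 2; // publisher -> server
  const downlink = viewerRttMs / 2;  // server -> viewer
  return uplink + downlink + bufferingMs;
}

// Both ends at ~100 ms ping to the data center, with an assumed 30 ms of buffering.
console.log(estimateBroadcastLatencyMs(100, 100, 30)); // 130
```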

As for quality, blocky artifacts are sometimes visible during motion. This is consistent with the nature of the VP8 codec and its main task: providing real-time video communication with acceptable quality and without communication delays.

The server is quite easy to install and configure. Starting it requires no serious skills beyond knowing Linux as an advanced user who can run console commands over SSH and use a text editor. As a result, we managed to set up one-to-many online broadcasting between browsers. Connecting additional viewers to the stream caused no problems either.

The broadcast quality proved quite acceptable for webinars and online broadcasts. The only thing that raised questions was the video resolution. The camera supports 1280 × 800, but the resolution of the test picture looks very much like 640 × 480. Apparently, this question needs to be clarified with the developers.

Video: testing a broadcast from a webcam via the WebRTC server

WebRTC (Web Real-Time Communications) is a technology that lets web applications and sites capture and selectively transmit audio and/or video media streams. It also lets them exchange arbitrary data between browsers without requiring intermediaries. The set of standards that makes up WebRTC lets you exchange data and hold peer-to-peer teleconferences without installing plugins or any other third-party software.

WebRTC consists of several interrelated application programming interfaces (APIs) and protocols that work together. The documentation here will help you understand the basics of WebRTC, how to set up and use data and media stream connections, and much more.

Compatibility

WebRTC implementations are still evolving, and each browser supports a different set of WebRTC features. We therefore strongly recommend using Google's adapter.js polyfill library before starting work on your code.

Adapter.js uses shims and polyfills to smooth over the differences between WebRTC implementations across the contexts that support it. It also handles vendor prefixes and other differences in property naming, which makes WebRTC development easier and the result as compatible as possible. The library is also available as an npm package.
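Vendor prefixes are one of the differences such a shim smooths over. Early Chrome exposed the peer connection constructor as `webkitRTCPeerConnection`, and early Firefox exposed it as `mozRTCPeerConnection`. The sketch below shows only the idea of picking whichever name exists on a global object; it is not adapter.js code. It takes the global scope as a parameter, so it can be tried outside a browser with a stand-in object.

```typescript
// Candidate names for the peer connection constructor, unprefixed first.
const PEER_CONNECTION_NAMES = [
  "RTCPeerConnection",
  "webkitRTCPeerConnection",
  "mozRTCPeerConnection",
] as const;

// Return the first constructor name the given global scope provides, or null.
function findPeerConnectionName(scope: Record<string, unknown>): string | null {
  for (const name of PEER_CONNECTION_NAMES) {
    if (typeof scope[name] === "function") {
      return name;
    }
  }
  return null;
}

// Stand-in for an old Chrome window object that only had the prefixed name.
class FakePeerConnection {}
const oldChromeWindow: Record<string, unknown> = {
  webkitRTCPeerConnection: FakePeerConnection,
};
console.log(findPeerConnectionName(oldChromeWindow)); // webkitRTCPeerConnection
```

Trying the unprefixed name first means that modern browsers, which expose the standard name, never take the legacy path.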

To explore the adapter.js library further, see its dedicated guide.

WebRTC concepts and usage

WebRTC serves many purposes. Together with related browser media APIs, it provides powerful multimedia capabilities for the web. These include audio and video conferencing, file exchange, screen capture, identity management, and interfacing with legacy telephone systems, including sending DTMF (touch-tone dialing) signals. Connections between peers can be made without any special drivers or plug-ins, and often without any intermediary services.

A connection between two peers is represented by an RTCPeerConnection object. Once the connection has been established and opened, media streams (MediaStream) and/or data channels (RTCDataChannel) can be added to it through the RTCPeerConnection object.

Media streams can consist of any number of tracks of media information. Tracks are represented by MediaStreamTrack objects and may contain one of several types of media data, including audio, video and text (such as subtitles or chapter titles). Most streams consist of at least one audio track or video track. They can be sent and received as live media or saved to a file.

You can also use the connection between two peers to exchange arbitrary data using the RTCDataChannel interface. It can carry back-channel information, metadata, game status packets and file transfers, or even serve as the primary data channel.
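For file transfer over a data channel, the application usually splits the file into messages small enough for every implementation to accept. This sketch assumes a 16 KiB chunk size, a commonly cited safe value for cross-browser interoperability. It shows only the splitting and reassembly on plain byte arrays. Sending each chunk with the channel's `send()` method and telling the receiver the total size are left to the application.

```typescript
const CHUNK_SIZE = 16 * 1024; // assumed per-message limit for interoperability

// Split a file's bytes into chunks of at most chunkSize bytes.
function splitIntoChunks(data: Uint8Array, chunkSize: number = CHUNK_SIZE): Uint8Array[] {
  const chunks: Uint8Array[] = [];
  for (let offset = 0; offset < data.length; offset += chunkSize) {
    chunks.push(data.subarray(offset, Math.min(offset + chunkSize, data.length)));
  }
  return chunks;
}

// Concatenate received chunks back into one buffer. This relies on in-order
// delivery, which data channels provide when created as ordered (the default).
function reassemble(chunks: Uint8Array[]): Uint8Array {
  const total = chunks.reduce((sum, chunk) => sum + chunk.length, 0);
  const result = new Uint8Array(total);
  let offset = 0;
  for (const chunk of chunks) {
    result.set(chunk, offset);
    offset += chunk.length;
  }
  return result;
}

const file = new Uint8Array(40000).map((_, i) => i % 256);
const chunks = splitIntoChunks(file);
console.log(chunks.length); // 3 (16384 + 16384 + 7232 bytes)
```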


WebRTC interfaces

WebRTC provides interfaces that work together to accomplish various tasks, so we have divided them into categories.

Connection setup and management

These interfaces are used to set up, open and manage WebRTC connections. They represent peer-to-peer media connections and data channels. They also include the interfaces used to exchange information about each peer's capabilities, so that the best configuration can be chosen for a two-way multimedia connection.

- RTCPeerConnection: represents a WebRTC connection between the local computer and a remote peer. It handles efficient transmission of data between the two peers.

- RTCSessionDescription: represents the session parameters. Each RTCSessionDescription consists of a type, indicating which part of the offer/answer negotiation it describes, and the SDP descriptor of the session.

- RTCIceCandidate: represents a candidate Interactive Connectivity Establishment (ICE) configuration for establishing an RTCPeerConnection.

- RTCIceTransport: represents information about an ICE transport.

- RTCPeerConnectionIceEvent: represents events that occur in relation to ICE candidates, usually on an RTCPeerConnection. One event type is delivered with this object: icecandidate.

- RTCRtpSender: manages the encoding and transmission of data for a MediaStreamTrack on an RTCPeerConnection.

- RTCRtpReceiver: manages the reception and decoding of data for a MediaStreamTrack on an RTCPeerConnection.

- RTCTrackEvent: indicates that a new incoming MediaStreamTrack was created and an associated RTCRtpReceiver object was added to the RTCPeerConnection.

- RTCCertificate: represents a certificate used by an RTCPeerConnection.

- RTCDataChannel: represents a bidirectional data channel between two peers of a connection.

- RTCDataChannelEvent: represents events that occur when an RTCDataChannel is attached to an RTCPeerConnection.

- RTCDTMFSender: manages the encoding and transmission of dual-tone multi-frequency (DTMF) signaling for an RTCPeerConnection.

- RTCDTMFToneChangeEvent: indicates an incoming DTMF tone change event. This event does not bubble and is not cancelable (unless otherwise specified).

- RTCStatsReport: asynchronously reports statistics for the given MediaStreamTrack.

- RTCIdentityProviderRegistrar: registers an identity provider (IdP).

- RTCIdentityProvider: enables a browser to request that an identity assertion be generated or validated.

- RTCIdentityAssertion: represents the identity of the remote peer of the current connection. If no peer has been set and verified yet, this interface returns null. Once set, it does not change.

- RTCIdentityEvent: represents an identity assertion event from an identity provider (IdP) on an RTCPeerConnection. One event type is delivered with this interface: identityresult.

- RTCIdentityErrorEvent: represents an error event associated with the identity provider (IdP) of an RTCPeerConnection. Two event types are delivered with this interface: idpassertionerror and idpvalidationerror.

Guides

- Overview of the WebRTC architecture: underneath the APIs that developers use to build WebRTC applications lies a set of network protocols and connection standards. This overview is a showcase of those standards.

- WebRTC lets you set up peer-to-peer connections that transmit arbitrary data, audio, video, or any combination of them in the browser. This article looks at the life of a WebRTC session, from establishing the connection to closing it when it is no longer needed.

- WebRTC API overview: WebRTC consists of several interrelated APIs and protocols that work together to support exchanging data and media between two or more peers. This article gives a brief overview of each of these APIs and the purpose it serves.

- WebRTC basics: this article walks you through creating a cross-browser RTC application. By the end of it, you should have a working data and media channel operating in peer-to-peer mode.

- WebRTC protocols: this article introduces the protocols on top of which the WebRTC API is built.

- This guide describes how you can use a peer-to-peer connection and associated ...

Most material on WebRTC focuses on the application level of writing code and does little to explain the technology itself. Let's try to go deeper and find out how a connection is established, what a session descriptor and candidates are, and what STUN and TURN servers are needed for.

WebRTC

Introduction

WebRTC is a browser-oriented technology for connecting two clients to transmit video data. Its main features are built-in browser support (no third-party plug-in technologies such as Adobe Flash are needed) and the ability to connect clients without additional servers. Such a direct connection is called peer-to-peer (hereafter, P2P).

Establishing a P2P connection is a rather difficult task, because computers do not always have public IP addresses, that is, addresses on the Internet. IPv4 addresses are in short supply, so (partly also for security) the NAT mechanism was developed. NAT lets you create private networks, for example for home use. Many home routers now support NAT. Thanks to it, all home devices have Internet access even though Internet providers usually give out a single IP address. Public IP addresses are unique on the Internet, while private ones are not. That is why establishing a P2P connection is difficult.
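You can check whether an address is private or public against the ranges reserved for private networks in RFC 1918: 10.0.0.0/8, 172.16.0.0/12 and 192.168.0.0/16. The sketch below handles dotted-quad IPv4 strings only. It leaves out IPv6 and other special ranges such as loopback, link-local and carrier-grade NAT.

```typescript
// Parse "a.b.c.d" into four octets, or return null if it is not valid IPv4.
function parseIPv4(address: string): number[] | null {
  const parts = address.split(".");
  if (parts.length !== 4) return null;
  const octets = parts.map((part) => (/^\d{1,3}$/.test(part) ? Number(part) : NaN));
  return octets.every((o) => o >= 0 && o <= 255) ? octets : null;
}

// True if the address lies in one of the RFC 1918 private ranges.
function isPrivateIPv4(address: string): boolean {
  const octets = parseIPv4(address);
  if (octets === null) {
    throw new Error(`Not an IPv4 address: ${address}`);
  }
  const [a, b] = octets;
  return (
    a === 10 ||                          // 10.0.0.0/8
    (a === 172 && b >= 16 && b <= 31) || // 172.16.0.0/12
    (a === 192 && b === 168)             // 192.168.0.0/16
  );
}

console.log(isPrivateIPv4("192.168.0.200")); // true
console.log(isPrivateIPv4("8.8.8.8"));       // false
```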

To understand this better, consider three situations:

- both nodes are on the same network (Figure 1);
- the nodes are on different networks, one private and one public (Figure 2);
- the nodes are on different private networks with the same IP addresses (Figure 3).

Figure 1: Both nodes on the same network

Figure 2: Nodes in different networks (one in private, other in public)


Figure 3: Nodes in different private networks, but with numerically equal addresses


In the figures above, the first letter of each two-character label denotes the node type (P = peer, R = router).

In the first figure the situation is favorable: within their network the nodes are fully identified by their IP addresses, so they can connect to each other directly.

In the second figure we have two different networks with similar node numbering. Here the routers have two network interfaces, one inside their network and one outside it, and therefore two IP addresses. Ordinary nodes have only one interface, through which they can communicate only within their own network. When they send data to someone outside their network, it goes through NAT inside the router. Other nodes therefore see them under the router's IP address, which serves as their external IP address. So node P1 has internal IP = 192.168.0.200 and external IP = 10.50.200.5, and that external address is shared by all other nodes on its network. The situation is similar for node P2. Therefore the two nodes cannot connect if they use only their internal (own) IP addresses. They could use external addresses, that is, the router addresses, but since all nodes in a private network share the same external address, this is rather difficult. This problem is solved using the NAT mechanism.
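One way to picture how many internal nodes share one external address is as a translation table in the router. Each outgoing (internal address, internal port) pair gets its own port on the router's external address, and replies to that port are forwarded back. The class below is a toy model with hypothetical names, reusing the addresses from the example above. Real NATs differ in how they allocate ports and which incoming packets they accept, and that variety is exactly what makes P2P connections hard.

```typescript
type Endpoint = { ip: string; port: number };

// Toy NAT: maps internal endpoints to ports on a single external IP address.
class ToyNat {
  readonly externalIp: string;
  private nextPort = 40000; // arbitrary first external port
  private readonly outbound = new Map<string, number>();  // "ip:port" -> external port
  private readonly inbound = new Map<number, Endpoint>(); // external port -> internal endpoint

  constructor(externalIp: string) {
    this.externalIp = externalIp;
  }

  // Rewrite the source of an outgoing packet, creating a mapping if needed.
  translateOutgoing(source: Endpoint): Endpoint {
    const key = `${source.ip}:${source.port}`;
    let externalPort = this.outbound.get(key);
    if (externalPort === undefined) {
      externalPort = this.nextPort++;
      this.outbound.set(key, externalPort);
      this.inbound.set(externalPort, source);
    }
    return { ip: this.externalIp, port: externalPort };
  }

  // Find the internal endpoint for a packet arriving at an external port.
  translateIncoming(externalPort: number): Endpoint | undefined {
    return this.inbound.get(externalPort);
  }
}

const router = new ToyNat("10.50.200.5");
const p1 = router.translateOutgoing({ ip: "192.168.0.200", port: 5000 });
const other = router.translateOutgoing({ ip: "192.168.0.201", port: 5000 });
console.log(p1, other); // same external IP, different external ports
```

A remote peer only ever sees the router's address and the mapped port. That mapped pair is what a STUN server reports back to the node.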

What happens if we still try to connect the nodes via their internal addresses? The data will not leave the network. To make the effect clearer, imagine the situation shown in the last figure, where both nodes have the same internal address. If they use these addresses to communicate, each node will be talking to itself.

WebRTC copes with such problems using the ICE protocol, which, admittedly, requires additional servers (STUN, TURN). More on all of this below.
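ICE collects several kinds of candidate address for each node:

- host candidates: the node's own interfaces;
- server-reflexive candidates: the public address a STUN server observes, that is, the router's NAT mapping;
- relay candidates: an address on a TURN server.

The candidates are ranked using the priority formula from the ICE specification (RFC 8445), shown below with its recommended type preferences. The local preference and component values in the example are the usual choices for a single interface and the RTP component, not requirements.

```typescript
// Recommended type preferences from RFC 8445.
const TYPE_PREFERENCE = { host: 126, prflx: 110, srflx: 100, relay: 0 } as const;
type CandidateType = keyof typeof TYPE_PREFERENCE;

// priority = 2^24 * typePreference + 2^8 * localPreference + (256 - componentId)
function candidatePriority(
  type: CandidateType,
  localPreference: number, // 0..65535, higher is preferred
  componentId: number,     // 1 for RTP, 2 for RTCP
): number {
  return 2 ** 24 * TYPE_PREFERENCE[type] + 2 ** 8 * localPreference + (256 - componentId);
}

for (const type of ["host", "srflx", "relay"] as const) {
  console.log(type, candidatePriority(type, 65535, 1));
}
// host 2130706431, srflx 1694498815, relay 16777215
```

Because the type preference occupies the highest bits, a direct host path always outranks a STUN-discovered one, and relaying through TURN is the last resort.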

Two phases of WebRTC

To connect two nodes via WebRTC (or simply via RTC, if two iPhones are being connected), some preliminary actions are needed to establish the connection. This is the first phase, connection setup. The second phase is the transmission of video data.

It is worth saying right away that WebRTC uses many different transport methods (TCP and UDP) and switches flexibly between them. Even so, it has no protocol for transmitting connection data. That is not surprising: connecting two P2P nodes is not easy. You therefore need some additional means of data transfer that is in no way tied to WebRTC. It can be a socket, the HTTP protocol, even SMTP, or the Russian Post. This mechanism for transmitting the initial data is called signaling. Not much information needs to be transmitted. All data is sent as text and comes in two types: SDP and ICE candidates. The first type is used to establish the logical connection and the second the physical one. More on this later; for now, it is only important to remember that WebRTC will give us some information that we need to pass to the other node. Once we have passed on all the necessary information, the nodes can connect and will no longer need our help. So the signaling mechanism, which we must implement separately, is used only while connecting and not while transmitting video data.
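WebRTC leaves signaling to the application, so the only contract is that both sides agree on a text format for the two kinds of data. Here is a minimal sketch that assumes JSON as that format. The message shapes are hypothetical and loosely mirror the fields of the browser's session description (`type`, `sdp`) and ICE candidate (`candidate`, `sdpMid`, `sdpMLineIndex`) objects. The resulting string could travel over WebSocket, HTTP or anything else.

```typescript
// The two kinds of data WebRTC asks the application to deliver.
type SignalMessage =
  | { kind: "sdp"; type: "offer" | "answer"; sdp: string }
  | {
      kind: "candidate";
      candidate: string;
      sdpMid: string | null;
      sdpMLineIndex: number | null;
    };

// Serialize a message to text for any transport (WebSocket, HTTP, e-mail...).
function encodeSignal(message: SignalMessage): string {
  return JSON.stringify(message);
}

// Parse text back into a message, rejecting anything of an unknown kind.
function decodeSignal(text: string): SignalMessage {
  const value = JSON.parse(text) as { kind?: unknown };
  if (value.kind !== "sdp" && value.kind !== "candidate") {
    throw new Error(`Unknown signaling message: ${text}`);
  }
  return value as SignalMessage;
}

const wire = encodeSignal({
  kind: "candidate",
  candidate: "candidate:1 1 udp 2130706431 192.168.0.200 54400 typ host",
  sdpMid: "0",
  sdpMLineIndex: 0,
});
const received = decodeSignal(wire);
if (received.kind === "candidate") {
  console.log(received.candidate);
}
```

The check in `decodeSignal` only validates the message kind. A production version would also validate the remaining fields before handing them to the browser.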

So, consider the first phase - the connection phase of the connection. It consists of several items. Consider this phase first for a node that initiates the connection, and then for the waiting.

- Initiator (caller):

  1. Obtaining the local (own) media stream and setting it up for transmission (getUserMedia)

  2. Offering to start the video data transfer (createOffer)

  3. Obtaining your SDP object and passing it through the signaling mechanism (SDP)

  4. Obtaining your ICE candidate objects and passing them through the signaling mechanism (ICE candidate)

  5. Obtaining the remote (other party's) media stream and displaying it on the screen (onaddstream)

- Waiting side (callee):

  1. Obtaining the local (own) media stream and setting it up for transmission (getUserMedia)

  2. Receiving the offer to start the video data transfer and creating an answer (createAnswer)

  3. Obtaining your SDP object and passing it through the signaling mechanism (SDP)

  4. Obtaining your ICE candidate objects and passing them through the signaling mechanism (ICE candidate)

  5. Obtaining the remote (other party's) media stream and displaying it on the screen (onaddstream)

The only difference is in step 2.

Despite the apparent jumble of steps, there are really only three stages: sending your own media stream (step 1), setting up the connection parameters (steps 2-4) and receiving the other party's stream (step 5). The most complicated is the second stage, because it consists of two parts: establishing the physical and the logical connection. The physical connection determines the path packets must take to get from one network node to another. The logical connection determines the video/audio parameters: what quality and which codecs to use.

Mentally, the createOffer or createAnswer stage should be tied to the stages of passing the SDP and ICE candidate objects.

Main entities

Media streams (MediaStream)

The main entity is the media stream, that is, the flow of video and audio data: picture and sound. Media streams come in two kinds, local and remote. A local stream receives data from input devices (camera, microphone), a remote one from the network. So each node has both a local and a remote stream. In WebRTC, streams are represented by the MediaStream interface, and there is also a LocalMediaStream subinterface specifically for local streams. In JavaScript you will only encounter the first one; if you use libjingle, you may also run into the second.

WebRTC has a rather confusing hierarchy inside a stream. Each stream can consist of several media tracks (MediaTrack), which in turn can consist of several media channels (MediaChannel). And there can be several media streams as well.

Let us take it in order, keeping an example in mind. Suppose we want to transmit not only video of ourselves but also video of our desk, with a sheet of paper on it that we are going to write on. We need two videos (us + desk) and one audio (us). Clearly we and the desk should go into different streams, because these data are probably only loosely related. So we will have two MediaStreams, one for us and one for the desk. The first will contain both video and audio data, the second only video (Figure 4).

Figure 4: Two different media streams, one for us and one for our desk

It is immediately clear that a media stream must at least be able to contain data of different types, video and audio. The technology accounts for this, so each data type is implemented as a media track, MediaTrack. A media track has a special property, kind, which tells whether it is video or audio (Figure 5).

Figure 5: Media streams consist of media tracks


How does all this look in a program? We create two media streams, then two video tracks and one audio track. We get access to the cameras and the microphone and tell each track which device to use. We add one video track and the audio track to the first media stream, and the video track from the other camera to the second media stream.

But how do we tell the media streams apart at the other end of the connection? For this, each media stream has a label property, the stream's tag or name (Figure 6). Media tracks have the same property, although at first glance it seems video and sound could be told apart in other ways.

Figure 6: Media streams and tracks are identified by tags


So if media tracks can be identified by their labels, why use two media streams in our example instead of one? We could send a single media stream and handle the tracks differently. This brings us to an important property of media streams: they synchronize their media tracks. Different media streams are not synchronized with each other, but within each media stream all tracks are played back at the same time.

So if we want our words, our facial expressions and our sheet of paper to be played back in sync, we should use one media stream. If that is not so important, it is better to use separate streams: the picture will be smoother.

If a track must be turned off during transmission, use the media track's enabled property.

Finally, consider stereo sound. Stereo is two different sounds, and they must be transmitted separately. Media channels (MediaChannel) are used for this. An audio track can have many channels (for example, 6 for 5.1 sound). Within a media track the channels are, of course, also synchronized. Video usually uses only one channel, but several are sometimes used, for example for advertising.

To sum up: we use media streams to transmit video and audio data. Data within each media stream is synchronized. We can use several media streams if we do not need synchronization between them. Inside each media stream there are media tracks of two types, for video and for audio. There are usually no more than two tracks, but there can be more if you need to send several different videos (the speaker and their desk). Each track can consist of several channels, which is usually done only for stereo sound.

In the simplest video chat we have one local media stream consisting of two tracks, a video track and an audio track, each with a single main channel. The video track handles the camera, the audio track handles the microphone, and the media stream is the container for both.
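The stream/track/channel hierarchy can be summarized as a small data model. This is a sketch of the concepts in the text, not the browser API: the class names mirror the text, channels are reduced to a simple count, and the labels ("front-camera", "desk-camera" and so on) are made up for the example with the desk.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class MediaTrack:
    label: str
    kind: str           # "video" or "audio"
    channels: int = 1   # e.g. 2 for stereo, 6 for 5.1 sound
    enabled: bool = True

    def __post_init__(self) -> None:
        if self.kind not in ("video", "audio"):
            raise ValueError(f"unknown track kind: {self.kind}")


@dataclass
class MediaStream:
    label: str
    tracks: List[MediaTrack] = field(default_factory=list)  # played back in sync

    def add_track(self, track: MediaTrack) -> None:
        self.tracks.append(track)

    def kinds(self) -> List[str]:
        return [t.kind for t in self.tracks]


# One stream for us (camera + stereo microphone), one for the desk (second camera).
me = MediaStream("me")
me.add_track(MediaTrack("front-camera", "video"))
me.add_track(MediaTrack("microphone", "audio", channels=2))
desk = MediaStream("desk")
desk.add_track(MediaTrack("desk-camera", "video"))

# A track can be switched off without removing it from its stream.
desk.tracks[0].enabled = False
```

Keeping "me" and "desk" as separate streams expresses the choice discussed above: the two videos are not synchronized with each other.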

Session Descriptor (SDP)

Different computers will always have different cameras, microphones, video cards and other equipment, each with many parameters. All of this must be agreed on before media data can be transmitted between two network nodes. WebRTC does this automatically and creates a special object, the SDP session descriptor. Pass this object to the other node and media data can be transmitted. Only there is no connection with the other node yet.

For that, any signaling mechanism is used. You can deliver the SDP via sockets, via a person (dictate it to the other node over the phone), or even via the Russian Post. It is all very simple: you are given a ready SDP and you need to send it. On the receiving side, it must be handed to WebRTC. The session descriptor is stored as text and can be modified in your application, but as a rule this is not necessary. For example, when connecting to a desk phone, you sometimes have to force the selection of the desired audio codec.
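Forcing a codec is usually done by editing the SDP text before it is set. A minimal sketch, assuming a plain SDP string with `\r\n` line endings: in SDP the payload types on an `m=` line are listed in order of preference, so moving the desired codec's payload type to the front makes it preferred. The function name `prefer_codec` and the sample offer are illustrative, and the sketch ignores corner cases of real SDP.

```python
def prefer_codec(sdp: str, media: str, codec: str) -> str:
    """Move the payload types of `codec` to the front of the `m=<media>` line."""
    lines = sdp.split("\r\n")
    # 1. Find payload types whose a=rtpmap line names the codec, e.g. "a=rtpmap:0 PCMU/8000".
    wanted = set()
    for line in lines:
        if line.startswith("a=rtpmap:"):
            payload_type, _, encoding = line[len("a=rtpmap:"):].partition(" ")
            if encoding.split("/")[0].lower() == codec.lower():
                wanted.add(payload_type)
    if not wanted:
        return sdp  # codec not offered: leave the descriptor untouched
    # 2. Reorder the payload list of the matching line: "m=audio <port> <proto> <pt> <pt> ...".
    result = []
    for line in lines:
        if line.startswith(f"m={media} "):
            fields = line.split(" ")
            head, payloads = fields[:3], fields[3:]
            payloads = [p for p in payloads if p in wanted] + [p for p in payloads if p not in wanted]
            line = " ".join(head + payloads)
        result.append(line)
    return "\r\n".join(result)


EXAMPLE_OFFER = (
    "v=0\r\n"
    "m=audio 9 UDP/TLS/RTP/SAVPF 111 0 8\r\n"
    "a=rtpmap:111 opus/48000/2\r\n"
    "a=rtpmap:0 PCMU/8000\r\n"
    "a=rtpmap:8 PCMA/8000\r\n"
)
```

For instance, `prefer_codec(EXAMPLE_OFFER, "audio", "PCMU")` moves payload type 0 in front of Opus, which can help with a desk phone that only speaks G.711.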

Normally, when establishing a connection, you have to specify some address, such as a URL. Here this is unnecessary, because you send the data to the destination yourself through the signaling mechanism. To tell WebRTC that we want to establish a p2p connection, call the createOffer function. After calling it and passing it a special callback, an SDP object is created and handed to that callback. All you need to do is send this object over the network to the other node (the other party). At the other end, the data arrives through the signaling mechanism, namely this SDP object. For that node this session descriptor is a foreign one and therefore carries useful information. Receiving this object is the signal to start the connection. So that node must agree and call the createAnswer function, which is a complete analogue of createOffer. Again, a local session descriptor is passed to your callback, and it must be sent back through the signaling mechanism.

Note that createAnswer can be called only after the other party's SDP object has been received. Why? Because the local SDP object generated by createAnswer must be based on the remote SDP object. Only then can your video settings be matched with the other party's. Likewise, you should not call createAnswer or createOffer before obtaining the local media stream: they would have nothing to put into the SDP object.
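The ordering rules in the two paragraphs above can be captured in a toy model. This is only a sketch of the rules as the text states them, not the browser API: the method names loosely mirror RTCPeerConnection (createOffer, createAnswer, setLocalDescription, setRemoteDescription), descriptors are plain dicts instead of SDP text, and the signaling transport is elided.

```python
from typing import Optional


class Peer:
    """Toy model of the offer/answer ordering rules (not a real WebRTC API)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.local_stream = False
        self.local_description: Optional[dict] = None
        self.remote_description: Optional[dict] = None

    def get_user_media(self) -> None:
        self.local_stream = True  # stands in for camera + microphone access

    def create_offer(self) -> dict:
        if not self.local_stream:
            raise RuntimeError("no local media stream: nothing to describe in the SDP")
        return {"type": "offer", "from": self.name}

    def create_answer(self) -> dict:
        if not self.local_stream:
            raise RuntimeError("no local media stream: nothing to describe in the SDP")
        if self.remote_description is None or self.remote_description["type"] != "offer":
            raise RuntimeError("an answer can only be built on top of a remote offer")
        return {"type": "answer", "from": self.name}

    def set_local_description(self, description: dict) -> None:
        self.local_description = description

    def set_remote_description(self, description: dict) -> None:
        self.remote_description = description


def handshake(caller: Peer, callee: Peer) -> None:
    """Run the steps in the order the text requires."""
    caller.get_user_media()
    offer = caller.create_offer()
    caller.set_local_description(offer)
    callee.set_remote_description(offer)   # delivered through signaling
    callee.get_user_media()
    answer = callee.create_answer()
    callee.set_local_description(answer)
    caller.set_remote_description(answer)  # delivered through signaling
```

After `handshake`, each peer holds two descriptors, its own and the other party's.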

Since the SDP object can be edited, the local descriptor must be set (setLocalDescription) after it is obtained. It may seem a bit strange to hand WebRTC back what it gave us itself, but that is the protocol. A remote descriptor must also be set (setRemoteDescription) when it is received. So on each node you set two descriptors: your own and the other party's (that is, local and remote).

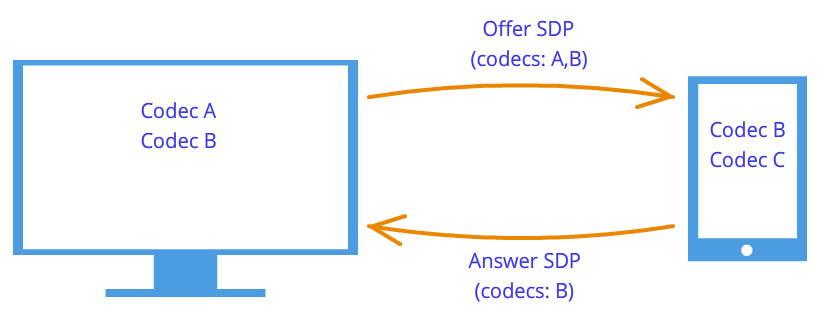

After this handshake the nodes know each other's wishes. For example, if node 1 supports codecs A and B, and node 2 supports codecs B and C, then, since each node knows both its own and the other's descriptor, both nodes will choose codec B (Figure 7). The logic of the connection is now established and media streams could be transmitted, but one more problem remains: the nodes are still connected only through the signaling mechanism.

Figure 7: Codec coordination
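The codec choice in Figure 7 can be sketched as an intersection of the two codec lists. This is a simplification, assuming the answer keeps the common codecs in the offerer's order of preference and the first one wins; real SDP negotiation also compares payload parameters such as clock rate and channel count.

```python
from typing import List, Optional


def common_codecs(offered: List[str], supported: List[str]) -> List[str]:
    """Codecs both sides support, kept in the offerer's order of preference."""
    accepted = {c.lower() for c in supported}
    return [c for c in offered if c.lower() in accepted]


def choose_codec(offered: List[str], supported: List[str]) -> Optional[str]:
    common = common_codecs(offered, supported)
    return common[0] if common else None  # None: no common codec, this media cannot be sent
```

With node 1 offering A and B and node 2 supporting B and C, `choose_codec(["A", "B"], ["B", "C"])` returns `"B"`, as in the figure.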


Candidates (ICE candidate)

WebRTC tries to confuse us with its new approach. When the connection is established, the address of the node to connect to is not specified. The logical connection is established first, not the physical one, although it has always been done the other way round. But this stops seeming strange once you remember that we are using a third-party signaling mechanism.

So the connection is already established (the logical connection), but there is no path along which the network nodes can transmit data. Things are not so simple here, but let us start with the simple case. Let the nodes be on the same private network. As we already know, they can easily connect to each other using their internal IP addresses (or perhaps some other addresses, if TCP/IP is not used).

Through some callbacks, WebRTC gives us ICE candidate objects. They also come in text form and, as with session descriptors, they simply need to be forwarded through the signaling mechanism. If the session descriptor contained information about our settings at the level of the camera and microphone, candidates contain information about our location on the network. Pass them to the other node and it will be able to connect to us physically, and since it already has the session descriptor, it can connect logically too, and the data will "flow". If it does not forget to send us its own candidate object, that is, information about where it is located on the network, we will be able to connect to it as well. Note another difference from classic client-server interaction. Communication with an HTTP server follows a request-response scheme: the client sends data to the server, the server processes it and sends the response to the address given in the request packet. In WebRTC you need to know two addresses and connect them from both sides.

Unlike session descriptors, only the remote candidates need to be set. Editing is not allowed here and would bring no benefit anyway. In some WebRTC implementations, candidates may be set only after the session descriptors have been set.
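Because candidates can arrive over signaling before the remote descriptor has been set, a common pattern is to buffer them. A minimal sketch, assuming the hypothetical helper class `RemoteCandidates` below; its `applied` list stands in for calls to RTCPeerConnection.addIceCandidate() and is not part of any WebRTC API.

```python
from typing import List


class RemoteCandidates:
    """Buffers remote ICE candidates until the remote session descriptor is set."""

    def __init__(self) -> None:
        self.remote_description_set = False
        self.pending: List[str] = []
        self.applied: List[str] = []  # stand-in for addIceCandidate() calls

    def on_candidate(self, candidate: str) -> None:
        # Candidates may arrive over signaling before the SDP has been applied.
        if self.remote_description_set:
            self.applied.append(candidate)
        else:
            self.pending.append(candidate)

    def on_remote_description(self) -> None:
        self.remote_description_set = True
        self.applied.extend(self.pending)  # flush in arrival order
        self.pending.clear()
```

Only remote candidates pass through this buffer; local ones are just sent to the other side.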

And why is there only one session descriptor but possibly many candidates? Because a node's location on the network can be described not only by its internal IP address but also by the external address of its router (and not necessarily just one), as well as by the addresses of TURN servers. The rest of this section is devoted to a detailed look at candidates and at how to connect nodes from different private networks.

So, two nodes are on the same network (Figure 8). How do we identify them? By IP address; there is no other way. True, different transports (TCP and UDP) and different ports can also be used. That is the information contained in a candidate object: IP, port, transport and a few other things. Suppose, for example, that UDP transport and port 531 are used.

Figure 8: Two nodes are on the same network.

Then, if we are node p1, WebRTC gives us a candidate object containing our IP address, port 531 and UDP transport. There is no exact format here, only a scheme. If we are node p2, the candidate is IP 10.50.150.3, port 531, UDP. Through the signaling mechanism, p1 receives p2's candidate (that is, p2's location: its IP and port). Then p1 can connect to p2 directly. More precisely, p1 will send data to 10.50.150.3:531 in the hope that it reaches p2. It does not matter whether this address belongs to p2 itself or to some intermediary. All that matters is that data sent to this address can reach p2.
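In practice, the text form of a candidate follows the `candidate` attribute grammar of the ICE specification: `candidate:<foundation> <component> <transport> <priority> <ip> <port> typ <type>`, optionally followed by name/value pairs such as `raddr`/`rport`. A minimal parser sketch, assuming well-formed input (IPv4 or IPv6 literals; no hostname candidates or other extensions are interpreted):

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    foundation: str
    component: int     # 1 = RTP, 2 = RTCP
    transport: str     # "udp" or "tcp"
    priority: int
    ip: str
    port: int
    typ: str           # "host", "srflx", "prflx" or "relay"
    raddr: Optional[str] = None   # related address, e.g. the internal address behind NAT
    rport: Optional[int] = None


def parse_candidate(line: str) -> Candidate:
    if line.startswith("a="):
        line = line[2:]  # the same attribute as it appears inside SDP
    if not line.startswith("candidate:"):
        raise ValueError("not an ICE candidate line")
    fields = line[len("candidate:"):].split()
    if len(fields) < 8 or fields[6] != "typ":
        raise ValueError("malformed candidate line")
    cand = Candidate(
        foundation=fields[0],
        component=int(fields[1]),
        transport=fields[2].lower(),
        priority=int(fields[3]),
        ip=fields[4],
        port=int(fields[5]),
        typ=fields[7],
    )
    # Optional name/value pairs follow the type, e.g. "raddr 192.168.0.200 rport 35777".
    extras = fields[8:]
    for name, value in zip(extras[0::2], extras[1::2]):
        if name == "raddr":
            cand.raddr = value
        elif name == "rport":
            cand.rport = int(value)
    return cand
```

For node p2 in the example, a host candidate might look like `candidate:1 1 UDP 2130706431 10.50.150.3 531 typ host`; the foundation and priority values here are illustrative.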

While the nodes are on the same network, everything is simple: each node has only one candidate object (always meaning its own, that is, its location on the network). But there will be many more candidates when the nodes are on different networks.

Let us move on to a more complex case. One node is behind a router (more precisely, behind NAT), and the second node is on the same network as this router (for example, on the Internet) (Figure 9).

Figure 9: One node behind NAT, the other not


This case has a particular solution, which we will now consider. A home router usually contains a NAT table. This is a special mechanism that lets nodes inside the router's private network reach, for example, websites.

Suppose the web server is connected to the Internet directly, that is, it has a public IP* address. Let it be node p2. Node p1 (the web client) sends a request to 10.50.200.10. First the data reaches router r1, or rather its internal interface 192.168.0.1. The router then remembers the source address (p1's address), records it in a special NAT table and replaces the source address with its own (p1 → r1). Next, via its external interface, the router sends the data directly to web server p2. The web server processes the data, generates a response and sends it back. It sends it to router r1, since that is the return address (the router replaced the address with its own). The router receives the data, looks it up in the NAT table and forwards it to node p1. Here the router acts as an intermediary.

And what if several nodes from the internal network access the external network at the same time? How will the router know whom to send the response to? This problem is solved with ports. When the router replaces a node's address with its own, it also replaces the port. If two nodes access the Internet, the router gives their source ports different replacement values. Then, when a packet from the web server comes back, the router can tell from the port which node the packet is intended for. An example follows below.
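The address-and-port replacement described above can be modelled as two dictionaries. This is an illustrative sketch, not how any particular router is implemented: the class `Nat` is hypothetical, external ports are handed out sequentially starting at 888 (the value used in the example below), and the second node's address is made up.

```python
from typing import Dict, Optional, Tuple

Addr = Tuple[str, int]  # (IP address, port)


class Nat:
    """Toy model of a home router's NAT table with port translation."""

    def __init__(self, external_ip: str, first_port: int = 888) -> None:
        self.external_ip = external_ip
        self.next_port = first_port
        self.by_internal: Dict[Addr, int] = {}  # internal (ip, port) -> external port
        self.by_external: Dict[int, Addr] = {}  # external port -> internal (ip, port)

    def outbound(self, src: Addr) -> Addr:
        """Rewrite the source of a packet leaving the private network."""
        if src not in self.by_internal:
            # A new entry is created only when data leaves the internal network.
            self.by_internal[src] = self.next_port
            self.by_external[self.next_port] = src
            self.next_port += 1
        return (self.external_ip, self.by_internal[src])

    def inbound(self, dst_port: int) -> Optional[Addr]:
        """Internal receiver for a packet arriving on dst_port, or None (packet dropped)."""
        return self.by_external.get(dst_port)


r1 = Nat("10.50.200.5")
p1 = ("192.168.0.200", 35777)
other = ("192.168.0.201", 35777)  # hypothetical second node on the same subnet
```

Two nodes using the same internal port still get different external ports (888 and 889), which is exactly what lets the router route the replies.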

Let us return to WebRTC, or rather to the part of it that uses the ICE protocol (hence "ICE candidates"). Node p2 has one candidate (its location on the network, 10.50.200.10), while node p1, which is behind a router with NAT, will have two candidates: a local one (192.168.0.200) and the router's candidate (10.50.200.5). The first is of no use, but it is generated anyway, because WebRTC knows nothing about the remote node: it might be on the same network, or it might not. The second candidate will come in handy, and, as we already know, the port will play an important role (for getting through NAT).
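The text does not say how ICE orders several candidates. In the ICE specification (RFC 8445), each candidate gets a numeric priority built from its type, a local preference and its component ID, using recommended type preferences of 126 for host, 110 for peer-reflexive, 100 for server-reflexive and 0 for relayed candidates. A sketch of that formula, assuming a single network interface (local preference 65535):

```python
TYPE_PREFERENCE = {"host": 126, "prflx": 110, "srflx": 100, "relay": 0}


def candidate_priority(cand_type: str, local_pref: int = 65535, component: int = 1) -> int:
    """priority = 2^24 * type preference + 2^8 * local preference + (256 - component ID)."""
    if not 0 <= local_pref <= 65535:
        raise ValueError("local preference must fit in 16 bits")
    if not 1 <= component <= 256:
        raise ValueError("component ID must be between 1 and 256")
    return (TYPE_PREFERENCE[cand_type] << 24) + (local_pref << 8) + (256 - component)


# Node p1's two candidates from the text, highest priority first.
p1_candidates = sorted(
    [("10.50.200.5", "srflx"), ("192.168.0.200", "host")],
    key=lambda c: candidate_priority(c[1]),
    reverse=True,
)
```

The host candidate comes first: it is tried in case both nodes share a network, and the router's (server-reflexive) candidate is the fallback that crosses NAT.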

An entry in the NAT table is created only when data leaves the internal network. Therefore node p1 must be the first to send data, and only after that will node p2 be able to reach node p1.

In practice, both nodes will be behind NAT. To create an entry in each router's NAT table, the nodes must send something to the remote node, but this time neither of them can reach the other. This is because the nodes do not know their external IP addresses, and sending data to internal addresses is pointless.

However, if the external addresses are known, the connection is easily established. If the first node sends data to the second node's router, that router ignores it, since its NAT table is still empty. However, the first node's router now has the needed entry in its NAT table. If the second node now sends data to the first node's router, that router will successfully forward it to the first node. Now the second router's NAT table also has the needed entry.
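This exchange is usually called hole punching. A toy simulation, assuming an "address-restricted" NAT that forwards an inbound packet only if the inside host has already sent something to that packet's source IP, and assuming both external ports are already known (which is what STUN provides). The class and the second network's addresses are hypothetical.

```python
from typing import Optional, Set, Tuple

Addr = Tuple[str, int]


class RestrictedNat:
    """Toy address-restricted NAT with one inside host and a known external port."""

    def __init__(self, external: Addr, inside: Addr) -> None:
        self.external = external
        self.inside = inside
        self.contacted: Set[str] = set()

    def send(self, remote: Addr) -> Addr:
        self.contacted.add(remote[0])  # the NAT table now "knows" this remote IP
        return self.external           # source address as seen from outside

    def receive(self, src: Addr) -> Optional[Addr]:
        """Forward to the inside host, or drop the packet (None)."""
        return self.inside if src[0] in self.contacted else None


def hole_punch(r1: RestrictedNat, r2: RestrictedNat):
    first = r2.receive(r1.send(r2.external))   # p1 -> r2: dropped, r2 has no entry yet
    second = r1.receive(r2.send(r1.external))  # p2 -> r1: passes, r1 remembers r2's IP
    third = r2.receive(r1.send(r2.external))   # p1 -> r2 again: both entries now exist
    return first, second, third


r1 = RestrictedNat(("10.50.200.5", 888), ("192.168.0.200", 35777))
r2 = RestrictedNat(("10.50.200.20", 999), ("192.168.1.50", 5000))  # hypothetical second network
```

The first packet is lost, but it is not wasted: it opens the way through the sender's own router.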

The problem is that to learn your external IP address you need a node located on the common network. To solve this, additional servers connected directly to the Internet are used. With their help, the coveted entries are also created in the NAT table.

STUN and TURN servers

When initializing WebRTC, you must specify the available STUN and TURN servers, which from now on we will call ICE servers. If no servers are specified, only nodes on the same network (connected to it without NAT) can be connected. It is worth noting right away that 3G networks require TURN servers.
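In the browser these servers are passed as the `iceServers` list of the RTCConfiguration, each entry having `urls` and, for TURN, `username` and `credential`. The sketch below mirrors that shape in Python (the host names and credentials are hypothetical) and estimates which candidate types can be gathered: host candidates always, server-reflexive ones with any STUN or TURN server, relayed ones only with TURN. This is a simplification of real browser behaviour.

```python
from typing import Dict, List, Set, Union

IceServer = Dict[str, Union[str, List[str]]]

# Same shape as RTCConfiguration.iceServers; hosts and credentials are hypothetical.
ICE_SERVERS: List[IceServer] = [
    {"urls": "stun:stun.example.org:3478"},
    {"urls": ["turn:turn.example.org:3478?transport=udp"], "username": "alice", "credential": "secret"},
]


def server_urls(server: IceServer) -> List[str]:
    urls = server["urls"]
    return [urls] if isinstance(urls, str) else list(urls)


def gatherable_types(ice_servers: List[IceServer]) -> Set[str]:
    types = {"host"}  # local interface addresses are always gathered
    for server in ice_servers:
        for url in server_urls(server):
            scheme = url.split(":", 1)[0]
            if scheme in ("stun", "stuns", "turn", "turns"):
                types.add("srflx")  # external address as seen by the server
            if scheme in ("turn", "turns"):
                if "username" not in server or "credential" not in server:
                    raise ValueError(f"TURN server {url} needs username and credential")
                types.add("relay")  # address allocated on the TURN server itself
    return types
```

With an empty list only `{"host"}` remains, which matches the statement above that without ICE servers only nodes on the same network can connect.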

A STUN server is simply a server on the Internet that returns the sender's address, that is, the address the request came from. A node behind a router contacts the STUN server to get through NAT. The packet that arrives at the STUN server contains the source address, which is the router's address, that is, our node's external address. The STUN server sends this address back. Thus the node learns its external IP address and the port through which it is reachable from the network. WebRTC then creates an additional candidate with this address (the router's external address and port). Now the router's NAT table has an entry that passes packets sent to the router on the right port through to our node.

Let us look at this process with an example.

Example (how a STUN server works)

We will denote the STUN server by s1, the router, as before, by r1, and the node by p1. We will also need to follow the NAT table, which we denote r1_NAT. This table usually contains many entries from different subnet nodes; we will not show them.

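So at the beginning the r1_NAT table is empty.

| Src IP | Src port | Dest IP | Dest port |
|---|---|---|---|
| 192.168.0.200 | 35777 | 12.62.100.200 | 6000 |

Table 2: Packet header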

Node p1 sends this packet to router r1 (how exactly does not matter; different subnets may use different technologies). The router must replace the source address Src IP, because the address in the packet is not suitable for the external network: moreover, addresses from this range are reserved, and no host on the Internet has such an address. The router makes the substitution in the packet and creates a new entry in its r1_NAT table. For this it has to come up with a port number. Recall that since several nodes inside the subnet may access the external network, the NAT table must store additional information so that the router can determine which of these nodes a return packet from the server is meant for. Let the router pick port 888.

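Modified packet header:

| Src IP | Src port | Dest IP | Dest port |
|---|---|---|---|
| 10.50.200.5 | 888 | 12.62.100.200 | 6000 |

Table 3: Modified packet header

| Internal IP | Internal port | External IP | External port |
|---|---|---|---|
| 192.168.0.200 | 35777 | 10.50.200.5 | 888 |

Table 4: NAT table with the new entry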

Here the IP address and port for the subnet are exactly the same as in the original packet. Indeed, on the way back we must be able to restore them completely. The IP address for the external network is the router's address, and the port has been changed to the one the router came up with.

The real port on which node p1 accepts the connection is, of course, 35777, but the server sends data to the fictitious port 888, which the router will change back to the real 35777.

So the router has replaced the source address and port in the packet header and added an entry to the NAT table. Now the packet is sent over the network to the server, that is, to node s1. At its input, s1 receives this packet:

| Src IP | Src port | Dest IP | Dest port |
|---|---|---|---|
| 10.50.200.5 | 888 | 12.62.100.200 | 6000 |

Table 5: The STUN server has received a packet

So the STUN server knows that a packet has arrived from 10.50.200.5:888. Now the server sends this address back. It is worth pausing to look once more at what we have just covered. The tables above show a piece of the packet header, not its content at all. We have not talked about the content because it is not so important: it is defined by the STUN protocol. Now we will look at the content in addition to the header. It will be simple and contain the router's address, 10.50.200.5:888, even though we took it from the packet header. This is not done often: protocols usually do not care about node addresses, only that packets are delivered to their destination. Here, however, we are looking at a protocol that establishes a path between two nodes.
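Here is what "copying the header address into the content" looks like on the wire. This sketch follows the STUN message format of RFC 5389: a 20-byte header (type, length, the magic cookie 0x2112A442, a 12-byte transaction ID) and an XOR-MAPPED-ADDRESS attribute in which the port and IPv4 address are XORed with the cookie. It is simplified on purpose: IPv4 only, and no MESSAGE-INTEGRITY or FINGERPRINT attributes.

```python
import os
import socket
import struct
from typing import Optional, Tuple

MAGIC_COOKIE = 0x2112A442
BINDING_REQUEST = 0x0001
BINDING_SUCCESS_RESPONSE = 0x0101
XOR_MAPPED_ADDRESS = 0x0020
HEADER = struct.Struct("!HHI12s")  # type, length, magic cookie, transaction ID


def binding_request(transaction_id: Optional[bytes] = None) -> bytes:
    """A Binding request is just the 20-byte header with no attributes."""
    tid = transaction_id if transaction_id is not None else os.urandom(12)
    return HEADER.pack(BINDING_REQUEST, 0, MAGIC_COOKIE, tid)


def binding_response(request: bytes, src_ip: str, src_port: int) -> bytes:
    """Server side: copy the source address seen in the packet header into the payload."""
    msg_type, _, cookie, tid = HEADER.unpack(request[:HEADER.size])
    if msg_type != BINDING_REQUEST or cookie != MAGIC_COOKIE:
        raise ValueError("not a STUN Binding request")
    x_port = src_port ^ (MAGIC_COOKIE >> 16)
    x_addr = int.from_bytes(socket.inet_aton(src_ip), "big") ^ MAGIC_COOKIE
    value = struct.pack("!BBHI", 0, 0x01, x_port, x_addr)  # reserved, IPv4 family, X-Port, X-Address
    attribute = struct.pack("!HH", XOR_MAPPED_ADDRESS, len(value)) + value
    return HEADER.pack(BINDING_SUCCESS_RESPONSE, len(attribute), MAGIC_COOKIE, tid) + attribute


def mapped_address(response: bytes) -> Tuple[str, int]:
    """Client side: recover the external (IP, port) from XOR-MAPPED-ADDRESS."""
    msg_type, length, cookie, _ = HEADER.unpack(response[:HEADER.size])
    if msg_type != BINDING_SUCCESS_RESPONSE or cookie != MAGIC_COOKIE:
        raise ValueError("not a STUN Binding success response")
    pos, end = HEADER.size, HEADER.size + length
    while pos + 4 <= end:
        attr_type, attr_len = struct.unpack("!HH", response[pos:pos + 4])
        value = response[pos + 4:pos + 4 + attr_len]
        if attr_type == XOR_MAPPED_ADDRESS and attr_len >= 8 and value[1] == 0x01:
            x_port, x_addr = struct.unpack("!HI", value[2:8])
            ip = socket.inet_ntoa((x_addr ^ MAGIC_COOKIE).to_bytes(4, "big"))
            return ip, x_port ^ (MAGIC_COOKIE >> 16)
        pos += 4 + (attr_len + 3) // 4 * 4  # attribute values are padded to 4 bytes
    raise ValueError("no IPv4 XOR-MAPPED-ADDRESS attribute")
```

In the example, s1 would call `binding_response(request, "10.50.200.5", 888)`, and p1 would get `("10.50.200.5", 888)` back from `mapped_address`. Against a real server, the request would be sent over a UDP socket (usually to port 3478). The parser skips any other attributes the server adds.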

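So now we have a second packet, travelling in the opposite direction:

| Src IP | Src port | Dest IP | Dest port |
|---|---|---|---|
| 12.62.100.200 | 6000 | 10.50.200.5 | 888 |

Table 6: Header of the STUN server's reply

| IP | Port |
|---|---|
| 10.50.200.5 | 888 |

Table 7: The STUN server sends a packet with this content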

The packet then travels over the network until it reaches the external interface of router r1. The router realizes that the packet is not meant for itself. How does it know? Only from the port. The router does not use port 888 for its own purposes; it uses it for the NAT mechanism. So it looks in the NAT table: it checks the External port column and searches for the row matching the Dest port of the incoming packet, that is, 888.

| Internal IP | Internal port | External IP | External port |
|---|---|---|---|
| 192.168.0.200 | 35777 | 10.50.200.5 | 888 |

Table 8: NAT table

We are in luck: such a row exists. Otherwise the packet would simply be discarded. Now we need to figure out which subnet node should receive this packet. Let us not rush, and recall once more how important ports are in this mechanism. Two nodes on the subnet could have sent requests to the external network at the same time. Then, if the router had chosen port 888 for the first node, it would have chosen port 889 for the second. Suppose that is what happened, so the r1_NAT table contains a second row with external port 889. The incoming packet is addressed to port 888, so the router picks the first row, which points to 192.168.0.200:35777, and replaces the receiver's address and port.

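| Src IP | Src port | Dest IP | Dest port |
|---|---|---|---|
| 12.62.100.200 | 6000 | 10.50.200.5 → 192.168.0.200 | 888 → 35777 |

Table 10: The router replaces the receiver address

| Src IP | Src port | Dest IP | Dest port |
|---|---|---|---|
| 12.62.100.200 | 6000 | 192.168.0.200 | 35777 |

Table 11: The router has replaced the receiver address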

The packet successfully reaches node p1, and by looking at its content the node learns its external IP address, that is, the router's address on the external network. It also learns the port that the router passes through NAT.

What next? What good is all this? The benefit is the entry in the r1_NAT table. If anyone now sends a packet to router r1 on port 888, the router will forward it to node p1. Thus a small, narrow passage to the hidden node p1 has been created.

The example above gives some idea of how NAT works and what a STUN server is for. In general, ICE and STUN/TURN servers exist precisely to overcome the restrictions of NAT.

There may be not one router between the node and the server but several. In that case the node receives the address of the router that is the first to be on the same network as the server. In other words, we get the address of the router connected to the STUN server. For p2p communication this is exactly what we need, as long as we remember that every router along the way will add the necessary row to its NAT table. So the way back will work just as well.

A TURN server is an enhanced STUN server. It follows immediately that any TURN server can also work as a STUN server. But it has additional benefits. If p2p communication is impossible (as in 3G networks), the server switches to relay mode, that is, it works as an intermediary. Of course, this is no longer p2p, but outside the ICE mechanism the nodes believe they are communicating directly.

When is a TURN server needed? Why is a STUN server not enough? The point is that there are several kinds of NAT. They all replace the IP address and port in the same way, but some of them have additional protection against "spoofing". For example, a symmetric NAT table stores two more parameters: the IP address and port of the remote node. A packet from the external network passes through NAT into the internal network only if its source address and port match those recorded in the table. (A symmetric NAT also creates a separate mapping, with its own external port, for each remote address.) Therefore the STUN trick fails: the NAT table stores the STUN server's address and port, and when the router receives a packet from the WebRTC peer, it discards it as "spoofed", since it did not come from the STUN server.

Thus a TURN server is needed when both parties are behind symmetric NAT (each behind their own).
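The difference between the two kinds of NAT can be shown with one toy class. This is a sketch under simplifying assumptions: the "cone" variant keeps one mapping per inside address and accepts packets from anyone (a full-cone NAT), while the "symmetric" variant keeps one mapping per (inside, remote) pair and accepts packets only from that remote. The peer's address is hypothetical; the other addresses come from the example above.

```python
from typing import Dict, Optional, Tuple

Addr = Tuple[str, int]


class Nat:
    """Toy NAT: 'cone' maps per inside address, 'symmetric' per (inside, remote) pair."""

    def __init__(self, external_ip: str, symmetric: bool, first_port: int = 888) -> None:
        self.external_ip = external_ip
        self.symmetric = symmetric
        self.next_port = first_port
        self.ports: Dict[Tuple[Addr, Optional[Addr]], int] = {}
        self.entries: Dict[int, Tuple[Addr, Optional[Addr]]] = {}

    def send(self, inside: Addr, remote: Addr) -> Addr:
        key = (inside, remote if self.symmetric else None)
        if key not in self.ports:
            self.ports[key] = self.next_port
            self.entries[self.next_port] = key
            self.next_port += 1
        return (self.external_ip, self.ports[key])

    def receive(self, src: Addr, dst_port: int) -> Optional[Addr]:
        entry = self.entries.get(dst_port)
        if entry is None:
            return None
        inside, remote = entry
        if self.symmetric and src != remote:
            return None  # "spoofed": not from the address this entry was created for
        return inside


def peer_can_use_stun_address(symmetric: bool) -> bool:
    p1 = ("192.168.0.200", 35777)
    stun_server = ("12.62.100.200", 6000)
    peer = ("10.50.200.20", 5000)  # hypothetical WebRTC peer
    r1 = Nat("10.50.200.5", symmetric)
    _, learned_port = r1.send(p1, stun_server)  # the address STUN reports back
    return r1.receive(peer, learned_port) == p1
```

With the cone variant the peer can use the address learned via STUN; with the symmetric variant the packet is dropped, and the mapping p1 would use towards the peer gets a different port anyway. That is the case where only a TURN relay helps.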

Brief summary

Here are some statements about WebRTC entities that you should always keep in mind. They are described in detail above. If any of them does not seem entirely clear, reread the relevant paragraphs.

- Media streams

  - Video and audio data are packaged into media streams

  - Media streams synchronize the media tracks they consist of

  - Different media streams are not synchronized with each other

  - Media streams can be local or remote: the camera and microphone are usually attached to the local one, while remote ones receive encoded data from the network

  - Media tracks come in two types, for video and for audio

  - Media tracks can be turned on and off

  - Media tracks consist of media channels

  - Media tracks synchronize the media channels they consist of

  - Media streams and media tracks have labels by which they can be told apart

- Session descriptor

  - The session descriptor is used to connect two network nodes logically

  - The session descriptor stores information about the available methods of encoding video and audio data

  - WebRTC uses an external signaling mechanism: forwarding session descriptors (SDP) is the application's job

  - Establishing the logical connection takes two stages, the offer and the answer

  - A session descriptor cannot be generated without a local media stream in the case of an offer, and without a remote session descriptor in the case of an answer

  - The resulting descriptor must be handed to WebRTC, and it does not matter whether it was received from the remote side or locally from the same WebRTC implementation

  - The session descriptor can be edited slightly

- Candidates

  - A candidate (ICE candidate) is the address of a node on the network

  - The address can be the node's own, or the address of a router or a TURN server

  - There are usually several candidates

  - A candidate consists of an IP address, a port and a transport type (TCP or UDP)

  - Candidates are used to establish the physical connection between two nodes on the network

  - Candidates also have to be sent through the signaling mechanism

  - Candidates also need to be handed to the WebRTC implementation, but only the remote ones

  - In some WebRTC implementations, candidates can be passed only after the session descriptor has been set

- STUN / TURN / ICE / NAT

  - NAT is a mechanism for providing access to an external network

  - Home routers maintain a special NAT table

  - The router replaces addresses in packets: the source address with its own if the packet goes to the external network, and the receiver address with the internal node's address if the packet comes from the external network

  - To let multiple nodes access the external network, NAT uses ports

  - ICE is a mechanism for traversing NAT

  - STUN and TURN servers are helper servers for traversing NAT

  - A STUN server makes it possible to create the necessary entries in the NAT table and also returns the node's external address

  - A TURN server generalizes the STUN mechanism and makes it work in all cases

  - In the worst cases, a TURN server is used as an intermediary (relay), that is, p2p turns into client-server-client communication

In this article, I want to tell you how to create your own multimedia web application using WebRTC and Bitrix technologies :)

A little about the technology

WebRTC appeared relatively recently: the first draft was presented in November 2012, and within about a year the technology reached a good level and can already be used.

The technology offers developers the ability to create multimedia web applications (video / audio calls) without having to download and install additional plugins.

Its goal is to build a single platform for real-time communications, which will work in any browser and on any operating system.

Until recently, the list of supported browsers was very short and consisted of just one: Google Chrome.

Over the past year this list has grown considerably, and the technology is now supported by almost all modern browsers :)

At the moment these are Mozilla Firefox 27+ and the WebKit-based browsers Google Chrome 29+, Opera 18+ and Yandex.Browser 13+.

There is hope that Safari will soon join this list, since Apple joined the WebRTC working group in February 2014.

Unfortunately, Microsoft does not plan to implement WebRTC and is creating its own CU-RTC-Web technology, but perhaps they will make it more or less compatible.

For Internet Explorer users, we offer a Chromium-based desktop application, which we also offer to users of other browsers that do not support this technology.

I talked about how we use WebRTC and how the desktop application works at the 1C-Bitrix Winter Partner Conference; you can watch my talk online or download the video :)

How does WebRTC work?

After reading the lines above, you have most likely already lost the desire to build a WebRTC application and have nearly closed the page :)

But don't panic! We already have everything in the product; see for yourself:

1. You can build the real-time signaling protocol on top of our Push & Pull module and the nginx module nginx-push-stream-module; how to work with them is described in detail in my blog on Bitrix (if this option does not suit you, you can easily replace it with another product, for example socket.io);

2. To traverse NAT, we have created a cloud service that is available to all product users at turn.calls.bitrix24.com;

and the most pleasant part :)

4. We have developed a special component that implements all the logic, so you can quickly dig in and start writing your own application (the component is available in the Push & Pull module starting from version 14.1.5);

Running the demo application :)

In the Push & Pull module (/bitrix/modules/pull/), starting from version 14.1.5, there is a demo folder. It currently contains two examples:

1. An example of working with the "Push & Pull" module;

2. Example of working with WebRTC;

The second one is exactly what I wanted to talk about :)

To get started, follow these steps:

1. Copy the component from the /bitrix/modules/pull/demo/webrtc/components/ folder, for example to /bitrix/components/yourcompanyprefix/;

2. Copy the page from /bitrix/modules/pull/demo/webrtc/html/, for example to the root of your site;

3. Configure the Push & Pull module to work with the queue server;

4. Register two users;

That's it: you can now open this page as two different users and start calling each other :)

The best documentation is the source code

I will briefly describe the purpose of each function, which are used in demo_webrutc.js (located in component), everything else, I hope it will be clear from the source code.

To better understand the component and how it works, read these two articles; they will make it easier to find your way around:

Creating your JS library: JS, CSS, phrases, dependencies.

Working with the "Push & Pull" module

WebRTC: Initialization

YourCompanyPrefix.webrtc()

The constructor of the WebRTC class: it sets the default values and handles signaling.

Note: BX.garbage fires when the user leaves or reloads the page, so you can use it to end the call.
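Outside Bitrix, the same idea can be sketched with the standard pagehide event in place of BX.garbage (a swapped-in technique, not how BX.garbage is implemented). The hangUp callback is hypothetical and stands for whatever closes your peer connection and notifies the other side:

```javascript
// Registers a one-shot handler that ends the call when the page is left or
// reloaded. `target` is normally `window`; it is a parameter for testability.
// Returns a function that unregisters the handler, e.g. after a normal hang-up.
function hangUpOnPageExit(target, hangUp) {
  const handler = () => hangUp();
  target.addEventListener("pagehide", handler, { once: true });
  return () => target.removeEventListener("pagehide", handler);
}

// In the browser:
// const cancel = hangUpOnPageExit(window, () => pc.close());
```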

BX.inheritWebrtc(YourCompanyPrefix.webrtc);

This function must be called immediately after initialization; it makes your class inherit all the base functionality of our core BX.webrtc library.
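The article does not show how BX.inheritWebrtc works internally. To illustrate the idea only, here is a hypothetical helper (inheritMethods, BaseWebrtc and MyWebrtc are invented names) that copies base-class methods onto a child prototype without overwriting the child's own overrides:

```javascript
// Hypothetical stand-in for BX.inheritWebrtc: NOT the Bitrix implementation.
// Copies every method of base.prototype that the child does not define itself.
function inheritMethods(child, base) {
  for (const name of Object.getOwnPropertyNames(base.prototype)) {
    if (name === "constructor") continue; // keep the child's own constructor
    if (!Object.prototype.hasOwnProperty.call(child.prototype, name)) {
      child.prototype[name] = base.prototype[name];
    }
  }
  return child;
}

// A minimal "base library" class with two shared methods.
function BaseWebrtc() {}
BaseWebrtc.prototype.ready = function () { return true; };
BaseWebrtc.prototype.log = function (msg) { return "[webrtc] " + msg; };

// Your class overrides log() and inherits ready().
function MyWebrtc() { BaseWebrtc.call(this); }
MyWebrtc.prototype.log = function (msg) { return "[my] " + msg; };
inheritMethods(MyWebrtc, BaseWebrtc);
```

Copying only the missing methods is what lets a component override, say, log() while still picking up ready() from the base library.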

WebRTC: UserMedia API

YourCompanyPrefix.webrtc.startGetUserMedia

Requests access to the video camera and microphone.

YourCompanyPrefix.webrtc.onUserMediaSuccess

Called when access to the camera and microphone has been granted.

YourCompanyPrefix.webrtc.onUserMediaError

Called when access to the camera and microphone fails.
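For comparison, the request for camera and microphone access can be sketched with the standard navigator.mediaDevices.getUserMedia() promise API. This is an assumption about the modern equivalent, not the code from demo_webrtc.js; the mediaDevices object is passed in so the sketch can be tested outside a browser:

```javascript
// Requests camera + microphone and routes the result to the two callbacks,
// mirroring onUserMediaSuccess / onUserMediaError.
// `mediaDevices` is normally navigator.mediaDevices.
function startGetUserMedia(mediaDevices, onUserMediaSuccess, onUserMediaError) {
  const constraints = { audio: true, video: true };
  return mediaDevices.getUserMedia(constraints).then(
    (stream) => onUserMediaSuccess(stream),
    // Typical causes: NotAllowedError (user denied), NotFoundError (no device).
    (error) => onUserMediaError(error)
  );
}

// In the browser:
// startGetUserMedia(navigator.mediaDevices, showLocalVideo, showAccessError);
```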

WebRTC: PeerConnection API

YourCompanyPrefix.webrtc.setLocalAndSend

Sets the meta-information (the session description) of the current user and sends it to the other user.
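With the standard API, setting and sending the local description typically looks like the sketch below. It assumes sendSignal is a hypothetical function that delivers messages over your signaling channel (Push & Pull in the demo):

```javascript
// Applies a freshly created offer/answer locally, then relays it.
// `pc` is an RTCPeerConnection; `sendSignal` is a hypothetical transport.
async function setLocalAndSend(pc, description, sendSignal) {
  await pc.setLocalDescription(description);
  // Only plain fields are sent; the receiver passes them to
  // pc.setRemoteDescription({ type, sdp }).
  sendSignal({ type: description.type, sdp: description.sdp });
}

// Caller side:
//   const offer = await pc.createOffer();
//   await setLocalAndSend(pc, offer, sendSignal);
```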

YourCompanyPrefix.webrtc.onRemoteStreamAdded

Called when a remote media stream arrives; displays it in the video tag.

YourCompanyPrefix.webrtc.onRemoteStreamRemoved

Called when the remote media stream is removed; stops displaying it in the video tag.
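The addstream and removestream events used in 2014 have since been deprecated; current browsers deliver a remote stream through the track event of RTCPeerConnection. A minimal sketch of the same job on the current API, with a plain helper to clear the video when the call ends:

```javascript
// Shows the remote stream in a <video> element when its tracks arrive.
// `pc` is an RTCPeerConnection and `video` a <video> element.
function wireRemoteVideo(pc, video) {
  pc.addEventListener("track", (event) => {
    // event.streams lists the remote MediaStream(s) the new track belongs to.
    const stream = event.streams[0];
    // Audio and video tracks of one stream fire separately; attach it once.
    if (stream && video.srcObject !== stream) {
      video.srcObject = stream;
    }
  });
}

// Stops displaying the remote stream, e.g. when the call ends.
function clearRemoteVideo(video) {
  video.srcObject = null;
}
```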

YourCompanyPrefix.webrtc.onIceCandidate

Called when meta-information about codecs, IP addresses and other connection details needs to be sent to the other user.
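Relaying candidates one by one as they are gathered is known as trickle ICE. A sketch of such a handler, again with a hypothetical sendSignal function; candidate, sdpMid and sdpMLineIndex are the standard properties of RTCIceCandidate:

```javascript
// Returns an onicecandidate handler that forwards each local candidate.
function makeIceCandidateHandler(sendSignal) {
  return function onIceCandidate(event) {
    // A null candidate means gathering is complete: nothing to relay.
    if (!event.candidate) return;
    sendSignal({
      type: "candidate",
      candidate: event.candidate.candidate,
      sdpMid: event.candidate.sdpMid,
      sdpMLineIndex: event.candidate.sdpMLineIndex,
    });
  };
}

// In the browser: pc.onicecandidate = makeIceCandidateHandler(sendSignal);
```

On the receiving side, the same three fields are passed to pc.addIceCandidate().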

YourCompanyPrefix.webrtc.peerConnectionError

Called when a connection error occurs between the users.

YourCompanyPrefix.webrtc.peerConnectionReconnect

Sends a request to reconnect the user to an existing session, for example after an error.

YourCompanyPrefix.webrtc.deleteEvents

Resets all changed variables before a new call.
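The article does not spell out how errors, reconnection and reset interact. One possible arrangement, sketched with hypothetical names, an assumed message shape and an assumed retry limit (maxReconnects), is a small call-state object:

```javascript
// Hypothetical call state showing how peerConnectionError,
// peerConnectionReconnect and deleteEvents might cooperate.
// Names, message shape and retry limit are assumptions, not demo_webrtc.js code.
function createCallState(sendSignal, maxReconnects) {
  const state = { callId: null, reconnects: 0, active: false };

  // Like deleteEvents: clear everything so the next call starts fresh.
  function reset() {
    state.callId = null;
    state.reconnects = 0;
    state.active = false;
  }

  function start(callId) {
    reset();
    state.callId = callId;
    state.active = true;
  }

  // On a connection error, ask the other side to rejoin the same session
  // (like peerConnectionReconnect) until the retry budget is used up.
  // Returns true if a reconnect request was sent, false if the call was reset.
  function onPeerConnectionError() {
    if (state.active && state.reconnects < maxReconnects) {
      state.reconnects += 1;
      sendSignal({ type: "reconnect", callId: state.callId });
      return true;
    }
    reset();
    return false;
  }

  return { state: state, start: start, onPeerConnectionError: onPeerConnectionError, reset: reset };
}
```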

WebRTC: Signaling API

YourCompanyPrefix.webrtc.callInvite

Sends another user an invitation to a video call.

YourCompanyPrefix.webrtc.callAnswer

Sends confirmation that the video call should be established.

YourCompanyPrefix.webrtc.callDecline

Sends a cancellation or rejection of the video call.

YourCompanyPrefix.webrtc.callCommand

Sends other commands to the other user (the user is ready to establish the connection, the user is busy, and so on).
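The four signaling functions boil down to sending small messages to the other user. A sketch with an assumed message shape; send(toUserId, message) is a hypothetical transport, which in the demo would be the Push & Pull module (socket.io or a plain WebSocket would work the same way):

```javascript
// Builds the four signaling calls on top of a generic transport.
// The message fields (type, from, command) are assumptions for illustration.
function createSignaling(send, fromUserId) {
  function post(toUserId, type, extra) {
    send(toUserId, Object.assign({ type: type, from: fromUserId }, extra));
  }
  return {
    callInvite: (toUserId) => post(toUserId, "invite", {}),
    callAnswer: (toUserId) => post(toUserId, "answer", {}),
    callDecline: (toUserId) => post(toUserId, "decline", {}),
    // Any other command, e.g. "ready" or "busy".
    callCommand: (toUserId, command) => post(toUserId, "command", { command: command }),
  };
}
```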

WebRTC: Basic commands (from the core_webrtc.js library)

YourCompanyPrefix.webrtc.ready

Checks whether WebRTC is available in the current browser.
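A feature check in the spirit of ready() can test for the standard entry points. Browsers of the article's era exposed prefixed names such as webkitRTCPeerConnection; this sketch checks only the unprefixed names used by current browsers and takes the global scope as a parameter so it can be tested:

```javascript
// WebRTC calls need both a peer connection and camera/microphone access.
// `scope` is normally `window`.
function isWebrtcAvailable(scope) {
  const hasPeerConnection = typeof scope.RTCPeerConnection === "function";
  const md = scope.navigator && scope.navigator.mediaDevices;
  const hasGetUserMedia = Boolean(md) && typeof md.getUserMedia === "function";
  return hasPeerConnection && hasGetUserMedia;
}

// In the browser: if (!isWebrtcAvailable(window)) { /* hide the call button */ }
```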

YourCompanyPrefix.webrtc.signalingReady

Checks whether signaling is available on the current page.

YourCompanyPrefix.webrtc.toggleAudio

Turns the microphone on or off.

YourCompanyPrefix.webrtc.toggleVideo

Turns the camera on or off.
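Toggling usually means flipping the enabled flag of the stream's tracks rather than stopping them: a disabled audio track produces silence and a disabled video track produces black frames, so the device can be re-enabled without asking for permission again. A sketch, assuming stream is a MediaStream (getAudioTracks() and getVideoTracks() are standard methods):

```javascript
// Sets `enabled` on every track in the list and returns the new value.
function setTracksEnabled(tracks, enabled) {
  for (const track of tracks) track.enabled = enabled;
  return enabled;
}

// Microphone on/off.
function toggleAudio(stream, enabled) {
  return setTracksEnabled(stream.getAudioTracks(), enabled);
}

// Camera on/off.
function toggleVideo(stream, enabled) {
  return setTracksEnabled(stream.getVideoTracks(), enabled);
}
```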

YourCompanyPrefix.webrtc.onIceConnectionStateChange

Called when the state of connection establishment changes.

YourCompanyPrefix.webrtc.onSignalingStateChange

Called when the signaling state changes.
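These two handlers correspond to the standard iceconnectionstatechange and signalingstatechange events. A sketch of mapping ICE connection states to UI actions; the state names are the standard values of RTCPeerConnection.iceConnectionState, while the action labels are hypothetical:

```javascript
// Maps an ICE connection state to a (hypothetical) UI action label.
function actionForIceState(iceConnectionState) {
  switch (iceConnectionState) {
    case "checking":
      return "show-connecting";
    case "connected":
    case "completed":
      return "show-in-call";
    case "disconnected":
      return "wait-for-recovery"; // often transient, may return to "connected"
    case "failed":
      return "reconnect"; // a natural place to call peerConnectionReconnect
    case "closed":
      return "hang-up";
    default:
      return "none"; // "new"
  }
}

// In the browser:
//   pc.oniceconnectionstatechange = () => handle(actionForIceState(pc.iceConnectionState));
//   pc.onsignalingstatechange = () => console.log(pc.signalingState);
```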

YourCompanyPrefix.webrtc.attachMediaStream

Attaches a video/audio stream to the video tag.
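Older versions of attachMediaStream had to handle each browser separately (for example, URL.createObjectURL in Chrome), while current browsers accept a MediaStream directly through the srcObject property. A sketch, assuming video is a <video> element; muting the local preview is a design choice that keeps your own microphone from playing back through your speakers:

```javascript
// Attaches a MediaStream to a <video> element and starts playback on load.
// Pass isLocal = true for your own camera preview so it is muted.
function attachMediaStream(video, stream, isLocal) {
  video.srcObject = stream;
  video.autoplay = true;
  video.muted = Boolean(isLocal);
  return video;
}
```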

YourCompanyPrefix.webrtc.log

Logging function.

I hope this article will be useful to you.