GPU Computing. GPU Optimization: The Basic Truths

AMD / ATI Radeon architecture features

This resembles the emergence of new biological species, when living beings evolve while colonizing new habitats in order to adapt better to their environment. In the same way, the GPU, which started out accelerating the rasterization and texturing of triangles, developed additional capabilities for executing shader programs that color those very triangles. These capabilities turned out to be in demand for non-graphics computing as well, where in some cases they deliver significant performance gains over traditional solutions.

Let us carry the analogy further. After a long evolution on land, mammals moved into the sea, where they displaced ordinary marine inhabitants. In this competition, mammals used both the advanced abilities they had developed on land and those they acquired specifically to adapt to life in water. Similarly, GPUs, building on the strengths of an architecture designed for 3D graphics, are increasingly acquiring special functionality useful for non-graphics tasks.

So what lets the GPU claim its own niche among general-purpose programs? The GPU microarchitecture is built very differently from that of ordinary CPUs, and certain advantages were built into it from the start. Graphics tasks involve independent parallel data processing, and the GPU is inherently multithreaded. For the GPU, however, this parallelism is a benefit rather than a burden: its microarchitecture is designed to exploit the large number of threads awaiting execution.

The GPU consists of several dozen processor cores (30 on NVIDIA GT200, 20 on Evergreen, 16 on Fermi), called Streaming Multiprocessors in NVIDIA terminology and SIMD Engines in ATI terminology. In this article we will call them miniprocessors, because each one runs several hundred program threads and can do almost everything an ordinary CPU can, though not quite everything.

Marketing names are confusing: to sound more impressive, they quote the number of functional units that can add and multiply, for example 320 vector "cores". These "cores" are more like grains. It is better to think of the GPU as a multicore processor with a large number of cores, each running many threads simultaneously.

Each miniprocessor has local memory: 16 KB on GT200, 32 KB on Evergreen and 64 KB on Fermi (in effect, a programmable L1 cache). Its access time is similar to that of an ordinary CPU's first-level cache, and it serves a similar purpose: delivering data to the functional units as quickly as possible. In the Fermi architecture, part of the local memory can be configured as an ordinary cache. On the GPU, local memory is used for fast data exchange between executing threads.

A typical GPU program works as follows. First, data is loaded into local memory from the GPU's global memory. Global memory is simply ordinary video memory, located (like system memory) separately from "its" processor; on a video card it consists of several chips soldered onto the board. Next, several hundred threads work with this data in local memory and write the result to global memory, after which it is transferred to the CPU. It is the programmer's responsibility to write the instructions that load data into local memory and unload it. In essence, this partitions the data of a specific task for parallel processing. The GPU also supports atomic read/write instructions, but they are inefficient and are usually needed only at the final stage, to "glue together" the results computed by all the miniprocessors.
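The load, process and store pattern just described can be sketched as a CPU-side simulation. This is a minimal illustration, not GPU code: each "block" copies a tile of the global array into a private list that stands in for local memory, reduces it with the tree pattern a block's threads would follow between barriers, and writes back one partial result. The final sum plays the role of the "gluing" step. The function name and the `block_size` parameter are hypothetical.

```python
def block_reduce_sum(global_mem, block_size):
    """Simulate a two-stage GPU sum: per-block reduction in 'local memory', then a final merge."""
    partial_results = []  # one slot per block, like a small array in global memory
    for start in range(0, len(global_mem), block_size):
        # Stage 1: the block loads its tile from global memory into local memory.
        local = list(global_mem[start:start + block_size])
        n = len(local)
        # Stage 2: tree reduction. On a GPU each inner iteration would be a separate
        # thread, with a barrier between strides.
        stride = 1
        while stride < n:
            for i in range(0, n - stride, 2 * stride):
                local[i] += local[i + stride]
            stride *= 2
        # Stage 3: one value per block is written back to global memory.
        partial_results.append(local[0])
    # Final "gluing" of per-block results (atomics or a second pass on a real GPU).
    return sum(partial_results)
```

For example, `block_reduce_sum(list(range(100)), 16)` splits the input into seven tiles and returns 4950.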

Local memory is shared by all threads running on a miniprocessor. That is why NVIDIA terminology even calls it shared memory and uses the term local memory for the opposite: a private area of an individual thread in global memory, visible and accessible only to that thread. Besides local memory, a miniprocessor has another memory area, about four times larger in all architectures. It is divided equally among all executing threads and holds the registers that store variables and intermediate results. Each thread gets several dozen registers. The exact number depends on how many threads the miniprocessor is running. This number is very important: global memory latency is very high (hundreds of clock cycles), and without caches there is nowhere else to keep intermediate results.
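The trade-off between registers per thread and the number of threads a miniprocessor can hold can be estimated with simple arithmetic. The sketch below assumes a 64 KB register file (four times GT200's 16 KB of local memory, following the ratio above), 32-bit registers, scheduling in whole 32-thread warps and a hardware cap of 1024 threads; all of these defaults are illustrative assumptions, not vendor-documented limits for every chip.

```python
def max_resident_threads(regfile_bytes, regs_per_thread, bytes_per_reg=4,
                         warp_size=32, hw_thread_limit=1024):
    """Estimate how many threads fit on one miniprocessor given its register file.

    Assumed model: registers are split evenly among threads, threads are admitted
    in whole warps, and a fixed hardware limit caps the total.
    """
    if regs_per_thread <= 0:
        raise ValueError("regs_per_thread must be positive")
    by_registers = regfile_bytes // (regs_per_thread * bytes_per_reg)
    # Round down to a whole number of warps.
    by_registers = (by_registers // warp_size) * warp_size
    return min(by_registers, hw_thread_limit)
```

With these assumptions, 16 registers per thread allows 1024 threads, while 32 registers halves that to 512, which is why compilers try hard to keep register usage low.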

One more important feature of the GPU is "soft" vectorization. Each miniprocessor has many compute units (8 on GT200, 16 on Radeon and 32 on Fermi), but they can all execute only the same instruction, at the same program address. The operands can differ: each thread has its own. For example, an instruction that adds the contents of two registers is executed simultaneously by all compute units, but each takes different registers. It is assumed that all threads of a GPU program, processing data in parallel, generally advance through the program code in lockstep, so all compute units are loaded evenly. If branches in the program make threads diverge in their execution paths, so-called serialization occurs. Then not all compute units are used: the threads are issuing different instructions, while the block of compute units, as we said, can execute only instructions with a single address. Naturally, performance drops below the maximum.

The advantage is that vectorization happens fully automatically: there is no programming with SSE, MMX and the like, and the GPU itself handles divergence. In principle, you can write GPU programs without thinking about the vector nature of the execution units at all, but such a program will not be very fast. The drawback is the large vector width. It exceeds the nominal number of functional units: 32 on NVIDIA GPUs and 64 on Radeon. Threads are processed in blocks of that size. NVIDIA calls such a block of threads a warp and AMD calls it a wavefront; they are the same thing. Thus, on 16 compute units a 64-thread wavefront is processed in four clock cycles (assuming an instruction of ordinary length). The author prefers the term warp, because of its association with the nautical term warp, a rope made of strands twisted together. Threads are likewise "twisted" into a single bundle. However, wavefront can also be associated with the sea: instructions arrive at the execution units like waves rolling onto the shore one after another.
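The four-clock figure follows from dividing the warp or wavefront width by the number of compute units. A minimal sketch of that arithmetic, assuming every instruction occupies each unit for exactly one clock (the "ordinary length" caveat above):

```python
def issue_cycles(group_size, lanes):
    """Clock cycles to push one instruction for a whole warp/wavefront through `lanes` units.

    Assumes each unit handles one thread's operation per clock (integer ceiling division).
    """
    return (group_size + lanes - 1) // lanes
```

A 64-thread Radeon wavefront on 16 units and a 32-thread GT200 warp on 8 units both take four clocks per instruction.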

If all threads have advanced equally through the program (are at the same place) and thus execute the same instruction, all is well; if not, execution slows down. In that case the threads of one warp or wavefront are at different places in the program, and they split into groups that share the same instruction address (in other words, the same instruction pointer). At any given moment only the threads of one group execute: all of them perform the same instruction, but with different operands. As a result, a warp runs as many times slower as the number of groups it is split into, regardless of how many threads each group contains. Even a group consisting of a single thread takes as long as a full warp. In hardware this is implemented by masking certain threads: the instructions are formally executed, but their results are not written anywhere and are not used later.
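The rule "a warp runs as many times slower as the number of groups" can be modeled directly. This sketch is a simplified model, not a description of any vendor's scheduler: it groups the lanes of one warp by instruction pointer, counts one full pass per group, and builds the per-group lane masks that decide which lanes actually commit results. The function names are hypothetical.

```python
def divergent_warp_cost(instruction_pointers):
    """Passes needed for one step of a warp whose threads may sit at different addresses.

    Each distinct instruction pointer forms a group; groups execute one after another
    with the other lanes masked off, so the cost is the number of groups, not their size.
    """
    return len(set(instruction_pointers))


def active_lane_masks(instruction_pointers):
    """For each instruction address, the mask of lanes whose results are kept."""
    masks = {}
    for lane, ip in enumerate(instruction_pointers):
        masks.setdefault(ip, [False] * len(instruction_pointers))[lane] = True
    return masks
```

A 32-lane warp with 31 threads at one address and a single straggler elsewhere costs two full passes, exactly as the text describes.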

Although at any moment each miniprocessor (Streaming Multiprocessor or SIMD Engine) executes instructions belonging to only one warp (bundle of threads), it keeps several dozen active warps in its execution pool. After executing an instruction of one warp, the miniprocessor next executes not the following instruction of the same warp but an instruction of another warp. That warp may be at a completely different place in the program; this does not affect speed, since only within a warp must all threads execute the same instructions for full-speed execution.

In this case, each of the 20 SIMD Engines has four active wavefronts of 64 threads each. Each thread is shown as a short line. Total: 64 × 4 × 20 = 5120 threads.

Thus, since each warp or wavefront consists of 32 to 64 threads, a miniprocessor has several hundred active threads executing almost simultaneously. Below we will see what architectural benefits such a large number of parallel threads brings, but first let us consider the limitations of GPU miniprocessors.

The main limitation is that the GPU has no stack on which function parameters and local variables could be stored. With so many threads, there is simply no room on the chip for stacks. Indeed, since a GPU runs on the order of 10,000 threads simultaneously, a 100 KB stack per thread would add up to 1 GB, the typical size of the entire video memory. Placing a stack of any significant size inside the GPU core itself is even less feasible. For example, with 1000 bytes of stack per thread, a single miniprocessor would need 1 MB of memory, almost five times more than its local memory and register storage combined.

Therefore, GPU programs have no recursion, and function calls offer little room to maneuver: all functions are inlined directly into the code when the program is compiled. This limits the GPU to computational tasks. For recursive algorithms with a known small recursion depth, a limited stack emulation in global memory is sometimes possible, but this is an atypical use of the GPU. It requires designing the algorithm specifically and investigating whether it can be implemented at all, with no guarantee of a speedup over the CPU.
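The usual workaround is to rewrite a recursive algorithm as a loop over a small, fixed-size, explicitly managed stack. The Python sketch below shows that transformation for summing a binary tree stored in array (heap) order; the fixed-length list stands in for the per-thread stack a kernel would keep in registers or global memory. The layout, the `max_stack` limit and the function name are illustrative assumptions.

```python
def tree_sum_iterative(values, max_stack=32):
    """Sum a binary tree stored in heap order (children of i are 2i+1 and 2i+2) without recursion.

    A preallocated fixed-size list emulates the bounded stack available to a GPU thread;
    exceeding it raises instead of growing, since a kernel cannot allocate more on demand.
    """
    if not values:
        return 0
    stack = [0] * max_stack
    stack[0] = 0  # start at the root
    top = 1
    total = 0
    while top > 0:
        top -= 1
        node = stack[top]
        total += values[node]
        for child in (2 * node + 1, 2 * node + 2):
            if child < len(values):
                if top == max_stack:
                    raise OverflowError("traversal deeper than the emulated stack allows")
                stack[top] = child
                top += 1
    return total
```

The known depth bound is what makes this workable: a complete tree of depth d needs only about d + 1 stack slots here, so the limit can be fixed at compile time.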

Fermi was the first to allow virtual functions, but their use is again limited by the lack of a large fast cache for each thread. 1536 threads share 48 KB or 16 KB of L1, so virtual functions can be used in a program only rarely; otherwise the stack spills into slow global memory, which slows execution and most likely eliminates any benefit over the CPU version.

Thus, the GPU is best thought of as a computing coprocessor: data is loaded into it, processed by some algorithm, and the result is returned.

Advantages of the architecture

But the GPU computes very fast, and its massive multithreading is what makes this possible. A large number of active threads partly hides the high latency of the separately located global video memory, about 500 clock cycles. This works especially well for code with a high density of arithmetic operations. As a result, the GPU does not need an L1-L2-L3 cache hierarchy, which is expensive in transistors. Instead, the chip can hold many compute units, delivering outstanding arithmetic performance. While the instructions of one thread or warp are being executed, hundreds of other threads quietly wait for their data.
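How many warps are "enough" can be estimated with a deliberately simplified model: each warp issues a run of arithmetic instructions, then stalls on a memory load, and the other resident warps must supply enough work to cover the stall. The model and parameter names are assumptions for illustration; real schedulers also contend with bandwidth limits and dependent instructions.

```python
def warps_to_hide_latency(memory_latency_cycles, arith_instrs_per_load, cycles_per_instr):
    """Rough count of resident warps needed so the compute units never idle.

    Simplified model: each warp does `arith_instrs_per_load` instructions
    (each occupying the units for `cycles_per_instr` clocks), then waits
    `memory_latency_cycles` for its next load.
    """
    work_per_warp = arith_instrs_per_load * cycles_per_instr
    # Integer ceiling division: the other warps must cover the whole stall.
    others_needed = -(-memory_latency_cycles // work_per_warp)
    return others_needed + 1  # plus the warp that is currently waiting
```

With a 500-cycle latency, 10 arithmetic instructions per load at 4 clocks each require 14 warps, while 125 instructions per load need only 2. This is why code with a high density of arithmetic operations hides latency best.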

Fermi introduced a second-level cache of about 1 MB, but it cannot be compared with the caches of modern CPUs; it serves more for communication between cores and for various programming tricks. Divided among all the tens of thousands of threads, it gives each thread a negligible amount.

Besides global memory latency, the compute device has many other latencies that need to be hidden: the latency of on-chip data transfer between the compute units and the first-level cache (that is, the GPU's local memory), the registers and the instruction cache. The register file, like local memory, is located separately from the functional units, and accessing them takes on the order of tens of clock cycles. Once again, a large number of threads (active warps) makes it possible to hide this latency effectively. Moreover, the total bandwidth of local memory access across the whole GPU, given the number of its miniprocessors, is much higher than the bandwidth of first-level cache access in modern CPUs. The GPU can process significantly more data per unit of time.

It follows that if the GPU is not supplied with a large number of parallel threads, its performance will be close to zero: it will work at the same pace as when fully loaded but do far less work. For example, with one thread instead of 10,000, performance will drop roughly a thousandfold, because not only will most units sit idle, but every latency will take full effect.

Hiding latency is an acute problem for modern high-frequency CPUs too, and they use sophisticated techniques to deal with it: deep pipelines and out-of-order execution. These require complex instruction schedulers, various buffers and so on, all of which take up die area. All of this is needed for the best single-threaded performance.

The GPU needs none of this: architecturally, it is faster at computational problems with a large number of threads. It turns multithreading into performance the way the philosopher's stone turns lead into gold.

The GPU was originally designed to execute shader programs for triangle pixels optimally; these are clearly independent and can be processed in parallel. From there it evolved, by adding various capabilities (local memory, addressable access to video memory and a more complex instruction set), into a very powerful computing device. Even so, it can be applied efficiently only to algorithms that allow a highly parallel implementation using a limited amount of local memory.

Example

One of the most classic GPU tasks is computing the interaction of N bodies that create a gravitational field. But if we need, say, to compute the evolution of the Earth-Moon-Sun system, the GPU is a poor helper: there are too few objects. For each object, its interactions with all other objects must be computed, and there are only two others. For the Solar System with all its planets and their moons (a couple of hundred objects), the GPU is still not very efficient. A multicore CPU, however, will not show its full power either, because of the high overhead of thread management, and will effectively run in single-threaded mode. But if we also need to compute the trajectories of comets and asteroid belt objects, this is already a task for the GPU, since there are enough objects to create the required number of parallel computation threads.
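The brute-force all-pairs computation behind this reasoning can be sketched as follows. It is a CPU-side Python illustration, not a GPU kernel: on a GPU, each iteration of the outer loop would typically be one thread, so the available parallelism equals the number of bodies. The 2D setting, the units (`G = 1`) and the softening term that avoids division by zero are assumptions made for brevity.

```python
import math


def accelerations(positions, masses, G=1.0, softening=1e-3):
    """All-pairs O(N^2) gravitational accelerations in 2D.

    Each outer iteration corresponds to one GPU thread handling one body.
    """
    n = len(positions)
    acc = []
    for i in range(n):  # one "thread" per body
        xi, yi = positions[i]
        ax = ay = 0.0
        for j in range(n):  # each thread loops over all other bodies
            if i == j:
                continue
            dx = positions[j][0] - xi
            dy = positions[j][1] - yi
            r2 = dx * dx + dy * dy + softening * softening
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            ax += G * masses[j] * dx * inv_r3
            ay += G * masses[j] * dy * inv_r3
        acc.append((ax, ay))
    return acc
```

For the Earth-Moon-Sun case the outer loop has only three iterations, so there would be only three threads to spread across thousands of GPU lanes, while each thread does just two inner iterations. With tens of thousands of asteroids the same code yields tens of thousands of independent threads.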

The GPU also performs well when computing collisions of globular clusters containing hundreds of thousands of stars.

Another way to use the GPU's power in the N-body problem arises when many separate problems must be computed, even if each has only a few bodies. For example, you may want to compute variants of the evolution of one system for different initial velocities. In that case the GPU can be used efficiently without any difficulty.
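Here the parallelism comes from the batch of independent variants rather than from the number of bodies. In the minimal sketch below, one test body orbits a fixed central mass using semi-implicit (symplectic) Euler integration, and each entry in `initial_speeds` is one variant. On a GPU, the index into that list would be the thread index. The integrator, the units (`gm = 1`) and the function names are illustrative assumptions.

```python
import math


def simulate_variant(v0, steps=1000, dt=1e-3, gm=1.0):
    """Integrate one test body around a fixed central mass; return its final distance."""
    x, y = 1.0, 0.0
    vx, vy = 0.0, v0
    for _ in range(steps):
        r3 = (x * x + y * y) ** 1.5
        # Semi-implicit Euler: update velocity first, then position with the new velocity.
        vx -= gm * x / r3 * dt
        vy -= gm * y / r3 * dt
        x += vx * dt
        y += vy * dt
    return math.hypot(x, y)


def run_batch(initial_speeds):
    """Each variant is independent; on a GPU, one thread per list element."""
    return [simulate_variant(v) for v in initial_speeds]
```

A speed of 1.0 gives a near-circular orbit that stays close to radius 1, while 1.2 sends the body outward. Thousands of such variants keep a GPU fully loaded even though each system has only two bodies.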

AMD Radeon microarchitecture details

We have reviewed the basic principles of GPU organization. They are common to video accelerators from all manufacturers, since these accelerators originally had a single target task: running shader programs. Nevertheless, manufacturers have found room to differ in the details of their microarchitectural implementations, just as CPUs from different vendors can differ greatly even while being compatible, for example Pentium 4 versus Athlon or Core. The NVIDIA architecture is already well known, so we will now look at Radeon and highlight the main differences between the two vendors' approaches.

AMD video cards gained full support for general-purpose computing starting with the Evergreen family, which was also the first to implement the DirectX 11 specification. Cards of the 47xx family have a number of significant limitations, discussed below.

The differences in local memory size (32 KB on Radeon versus 16 KB on GT200 and 64 KB on Fermi) are generally not fundamental, and neither is the 64-thread wavefront on AMD versus the 32-thread warp on NVIDIA. Almost any GPU program can easily be reconfigured and tuned for these parameters. Performance may change by tens of percent, but for GPUs this matters little, since a GPU program usually runs either ten times slower than its CPU counterpart, or ten times faster, or not at all.

More important is AMD's use of VLIW (Very Long Instruction Word) technology. NVIDIA uses simple scalar instructions operating on scalar registers; its accelerators implement a simple, classic RISC design. AMD cards have the same number of registers as GT200, but the registers are 128 bits wide. Each VLIW instruction operates on several four-component registers with 32-bit components, which resembles SSE, but VLIW is far more capable. This is not SIMD like SSE: here the operation for each pair of operands can be different and even dependent on the others! For example, let the components of register A be A1, A2, A3, A4, and likewise for register B. A single instruction executed in one clock cycle can compute, for example, the number A1 × B1 + A2 × B2 + A3 × B3 + A4 × B4 or the two-dimensional vector (A1 × B1 + A2 × B2, A3 × B3 + A4 × B4).
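The difference between SIMD and VLIW in this example lies in what one instruction is allowed to compute. The Python below is a functional model only; it does not capture timing or the real encoding of a VLIW bundle. A SIMD instruction applies the same operation lane by lane, while a VLIW bundle can wire its slots together so that dependent additions fold the products into a dot product. The function names are hypothetical.

```python
def simd_mul(a, b):
    """SIMD (SSE-like): the same operation in every lane, no interaction between lanes."""
    return tuple(a[k] * b[k] for k in range(4))


def vliw_dot4(a, b):
    """One VLIW bundle: four multiplies plus dependent adds -> A1*B1 + A2*B2 + A3*B3 + A4*B4."""
    products = simd_mul(a, b)
    return products[0] + products[1] + products[2] + products[3]


def vliw_dot2x2(a, b):
    """Same registers, different slot wiring: (A1*B1 + A2*B2, A3*B3 + A4*B4)."""
    return (a[0] * b[0] + a[1] * b[1], a[2] * b[2] + a[3] * b[3])
```

For A = (1, 2, 3, 4) and B = (5, 6, 7, 8), the SIMD result is four separate products, while the two VLIW variants give 70 and (17, 53).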

This was made possible by the GPU's lower clock frequency compared with CPUs and by the substantial shrinking of process technology in recent years. No scheduler is needed, and almost every instruction executes in a single clock cycle.

Thanks to these vector instructions, Radeon's peak single-precision performance is very high and already reaches into the teraflops.

Instead of four single-precision numbers, one 128-bit vector register can hold two double-precision numbers. One VLIW instruction can add two pairs of doubles, multiply two numbers, or multiply two numbers and add a third. As a result, peak double-precision performance is about five times lower than single-precision performance. For the top Radeon models it matches NVIDIA Tesla on the new Fermi architecture and is much higher than the double-precision performance of GT200-based cards. On consumer GeForce cards based on Fermi, the maximum double-precision speed was cut by a factor of four.
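The five-to-one ratio can be checked with the usual peak-throughput formula: units × floating-point operations per unit per clock × clock frequency. The figures below are assumptions taken from the Radeon HD 5870 (Evergreen) specification: 20 SIMD engines × 16 five-slot VLIW units at 850 MHz. In single precision, each of the five slots performs a multiply-add (2 flops). In double precision, each VLIW unit performs one fused multiply-add (2 flops) per clock.

```python
def peak_gflops(simd_engines, units_per_engine, flops_per_unit_per_clock, clock_mhz):
    """Theoretical peak in GFLOPS = units x flops per clock x clock frequency."""
    return simd_engines * units_per_engine * flops_per_unit_per_clock * clock_mhz / 1000.0


# Assumed Radeon HD 5870 figures: 20 SIMD engines x 16 VLIW units, 850 MHz.
single = peak_gflops(20, 16, 5 * 2, 850)  # 5 slots x multiply-add
double = peak_gflops(20, 16, 2, 850)      # one double-precision multiply-add per VLIW unit
```

This gives 2720 GFLOPS in single precision and 544 GFLOPS in double precision, a ratio of exactly five.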

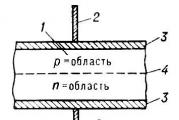

How a Radeon works: only one of the 20 miniprocessors working in parallel is shown.

Unlike CPU manufacturers (above all, makers of x86-compatible chips), GPU manufacturers are not bound by compatibility concerns. A GPU program is first compiled into an intermediate code, and when the program is launched, the driver compiles that code into machine instructions for the specific GPU model. As described above, GPU manufacturers have taken advantage of this by designing convenient ISAs (Instruction Set Architectures) for their GPUs and changing them from generation to generation. This alone added some percentage of performance, because no decoder is needed. AMD went even further and devised its own layout for instructions in machine code: they are arranged not sequentially (in program listing order) but in sections.

First come the sections of branch instructions, which reference blocks of continuous arithmetic instructions corresponding to the different branches. These blocks are made of VLIW bundles. The arithmetic sections contain only arithmetic instructions operating on data in registers or local memory. This organization simplifies managing the instruction stream and delivering it to the execution units, which is all the more useful because VLIW instructions are relatively large. There are also separate sections for memory access instructions.

| Section | Contents | | | |
|---|---|---|---|---|
| Conditional branch instruction sections | | | | |
| Section 0 | Branch 0 | Link to section 3 of continuous arithmetic instructions | | |
| Section 1 | Branch 1 | Link to section 4 | | |
| Section 2 | Branch 2 | Link to section 5 | | |
| Sections of continuous arithmetic instructions | | | | |
| Section 3 | VLIW instruction 0 | VLIW instruction 1 | VLIW instruction 2 | VLIW instruction 3 |
| Section 4 | VLIW instruction 4 | VLIW instruction 5 | | |
| Section 5 | VLIW instruction 6 | VLIW instruction 7 | VLIW instruction 8 | VLIW instruction 9 |
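The sectioned layout in this table can be modeled as a small data structure. Branch entries store only a link to a section of arithmetic bundles, so the control logic never has to scan large VLIW instructions. AMD's own documentation describes this organization in terms of control-flow instructions and clauses. The Python below is a hypothetical model of the table above, not the actual binary encoding.

```python
from dataclasses import dataclass


@dataclass
class ControlFlowEntry:
    branch: int       # which branch this entry represents
    alu_section: int  # index of the section holding that branch's arithmetic bundles


# Hypothetical encoding of the table: sections 0-2 link to sections 3-5.
CF_SECTIONS = [ControlFlowEntry(0, 3), ControlFlowEntry(1, 4), ControlFlowEntry(2, 5)]
ALU_SECTIONS = {
    3: [f"VLIW instruction {k}" for k in range(0, 4)],
    4: [f"VLIW instruction {k}" for k in range(4, 6)],
    5: [f"VLIW instruction {k}" for k in range(6, 10)],
}


def expand_branch(cf_entries, alu_sections, branch):
    """Return the VLIW bundles executed for `branch` by following the branch entry's link."""
    for entry in cf_entries:
        if entry.branch == branch:
            return alu_sections[entry.alu_section]
    raise KeyError(f"no control-flow entry for branch {branch}")
```

For branch 1, `expand_branch(CF_SECTIONS, ALU_SECTIONS, 1)` returns the two bundles of section 4.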

GPUs from both manufacturers (NVIDIA and AMD) also have built-in instructions for fast computation of several basic mathematical functions for single-precision numbers: square root, exponent, logarithm, sine and cosine. Special compute units exist for this. They "originated" from the need for fast approximations of these functions in geometry shaders.

Even someone who did not know that GPUs are used for graphics and knew only their technical specifications could guess from these features that these computing coprocessors descended from video accelerators. Similarly, certain traits of marine mammals told scientists that their ancestors were land animals.

An even more obvious sign of the device's graphics origin is its units for reading two- and three-dimensional textures with support for bilinear interpolation. They are widely used in GPU programs because they provide fast and simple reading of read-only data arrays. A standard pattern for a GPU application is to read source data arrays, process them in compute kernels, and write the result to another array, which is then transferred back to the CPU. This scheme is standard and widespread because it suits the GPU architecture. Tasks that intensively read and write one large region of global memory, and therefore contain data dependencies, are difficult to parallelize and implement efficiently on the GPU. Their performance will also depend heavily on global memory latency, which is very high. But if a task fits the "read data, process, write result" template, running it on the GPU will almost certainly give a large speedup.
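What a texture unit does in hardware for a bilinear fetch can be written out in a few lines. This sketch makes simplifying assumptions: integer coordinates land exactly on texels, addressing is clamp-to-edge, and texel-center offsets, other addressing modes and the reduced-precision filter weights of real hardware are ignored.

```python
import math


def bilinear_sample(texture, x, y):
    """Sample a 2D array at fractional coordinates with clamp-to-edge addressing.

    Blends the four nearest texels, weighted by the fractional parts of x and y.
    """
    h, w = len(texture), len(texture[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = texture[y0][x0] * (1 - fx) + texture[y0][x1] * fx
    bottom = texture[y1][x0] * (1 - fx) + texture[y1][x1] * fx
    return top * (1 - fy) + bottom * fy
```

On a 2 × 2 texture holding 0, 10, 20 and 30, sampling the exact center returns their average, 15. A GPU performs this blend for free as part of each texture fetch.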

For texture data, the GPU has a separate hierarchy of small first- and second-level caches, which speeds up texture access. This hierarchy originally appeared in graphics processors to exploit locality of texture access: after one pixel has been processed, the neighboring pixel will very likely need nearby texture data. Many algorithms for ordinary computations access data in a similar way, so texture caches inherited from graphics can be very helpful.

Although the L1 and L2 caches on NVIDIA and AMD cards are roughly the same size (evidently dictated by what is optimal for game graphics), access latency to these caches differs significantly. On NVIDIA it is higher, and the texture caches in GeForce mainly reduce the load on the memory bus rather than directly speeding up data access. This is not noticeable in graphics programs but matters for general-purpose programs. On Radeon, texture cache latency is lower, though still higher than the latency of the miniprocessors' local memory. For example, for optimal matrix multiplication on NVIDIA cards it is better to use local memory, loading the matrix into it block by block, whereas on AMD it is better to rely on the low-latency texture cache and read matrix elements as needed. But this is already fairly fine-grained optimization, applied to an algorithm that has already been ported to the GPU.
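The block-loading strategy recommended above for NVIDIA cards is ordinary tiled matrix multiplication. The Python sketch below shows only the data-reuse structure: one tile of A and one tile of B are "loaded" into fast memory, then each loaded element is reused by several outputs. It does not model threads, barriers or the texture-cache variant preferred on Radeon, and the `tile` size is an arbitrary illustrative choice.

```python
def matmul_tiled(a, b, tile=2):
    """C = A x B computed tile by tile, as a kernel staging blocks in local memory would."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for k0 in range(0, k, tile):
                # "Load" one tile of A and one tile of B into fast memory.
                a_tile = [row[k0:k0 + tile] for row in a[i0:i0 + tile]]
                b_tile = [row[j0:j0 + tile] for row in b[k0:k0 + tile]]
                # Each loaded element is reused by every output in the tile.
                for di, a_row in enumerate(a_tile):
                    for dj in range(len(b_tile[0])):
                        c[i0 + di][j0 + dj] += sum(
                            a_row[t] * b_tile[t][dj] for t in range(len(a_row))
                        )
    return c
```

Edge tiles simply come out smaller, so the result matches ordinary multiplication for any matrix shape.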

This difference also shows up when 3D textures are used. One of the first GPU computing benchmarks to show a serious advantage for AMD used 3D textures, because it worked with a three-dimensional data array. Texture access latency on Radeon is significantly lower, and the 3D case is additionally optimized in hardware.

To get maximum performance from different vendors' hardware, the application needs some tuning for a specific card, but this matters an order of magnitude less than designing the algorithm for the GPU architecture in the first place.

Limitations of the Radeon 47xx series

In this family, support for GPU computing is incomplete. Three important points stand out. First, there is no local memory: it physically exists but lacks the general-purpose access required by the modern GPU programming standard. It is emulated in global memory, so, unlike on a full-featured GPU, using it brings no benefit. Second, support for atomic memory operations and synchronization instructions is limited. Third, the instruction cache is rather small: beyond a certain program size, execution slows down. There are other, minor limitations. In short, only programs ideally suited to the GPU will run well on this card. In simple test programs that work only with registers, the card can show good GFLOPS figures, but programming anything complex for it efficiently is problematic.

Advantages and disadvantages of Evergreen

Compared from the standpoint of GPU computing, AMD's 5xxx series looks like a very powerful GT200, so powerful that its peak performance exceeds Fermi's by about two and a half times, especially after the specifications of NVIDIA's new cards were trimmed and the number of cores was reduced. However, the L2 cache introduced in Fermi simplifies implementing some algorithms on the GPU, widening the range of tasks the GPU can handle. Interestingly, for CUDA programs well optimized for the previous-generation GT200, Fermi's architectural innovations often gave nothing. These programs sped up in proportion to the increase in the number of compute units, that is, by less than a factor of two (for single-precision numbers), or even less, because memory bandwidth did not increase (or for other reasons).

In tasks that suit the GPU architecture and have a pronounced vector nature (for example, matrix multiplication), Radeon achieves performance relatively close to its theoretical peak and outperforms Fermi, not to mention multicore CPUs. This is especially true for single-precision tasks.

Radeon also has a smaller die, lower heat output and power consumption, a higher yield of working chips and, accordingly, lower cost. In 3D graphics itself, Fermi's advantage, where it exists at all, is much smaller than the difference in die area. This is largely because Radeon's computational architecture, with 16 compute units per miniprocessor, a 64-thread wavefront and vector VLIW instructions, is excellent for its main task: computing graphics shaders. For the vast majority of ordinary users, gaming performance and price are the priorities.

For professional and scientific programs, the Radeon architecture offers the best price/performance ratio, the best performance per watt and the best absolute performance in tasks that fundamentally fit the GPU architecture and allow parallelization and vectorization.

For example, in brute-force key search, a fully parallel and easily vectorized task, Radeon is several times faster than GeForce and several dozen times faster than a CPU.

This is consistent with AMD's overall Fusion concept, according to which the GPU should complement the CPU and eventually be integrated into the CPU itself, just as the math coprocessor moved from a separate chip into the processor core (this happened about twenty years ago, before the first Pentium processors). The GPU will become an integrated graphics core and a vector coprocessor for streaming tasks.

Radeon uses a clever technique of interleaving instructions from different wavefronts on its functional units. This is easy to do, because these instructions are completely independent. The principle is similar to the pipelined execution of independent instructions in modern CPUs. Apparently, it allows complex, many-byte vector VLIW instructions to execute efficiently. In a CPU, this requires either a complex scheduler to find independent instructions or Hyper-Threading technology, which likewise supplies the CPU with instructions from different threads that are independent by construction.

| tact 0. | tact 1. | tact 2. | tut 3. | tut 4. | tut 5. | tut 6. | tut 7. | VLIW module | |

| wave Front 0 | wave Front 1. | wave Front 0 | wave Front 1. | wave Front 0 | wave Front 1. | wave Front 0 | wave Front 1. | ||

|---|---|---|---|---|---|---|---|---|---|

| → | instrase 0. | instrase 0. | instrase sixteen | instrase sixteen | instrase 32. | instrase 32. | instrase 48. | instrase 48. | Vliw0. |

| → | instrase one | … | … | … | … | … | … | … | Vliw1 |

| → | instrase 2. | … | … | … | … | … | … | … | VLIW2. |

| → | instrase 3. | … | … | … | … | … | … | … | VLIW3. |

| → | instrase four | … | … | … | … | … | … | … | VLIW4. |

| → | instrase five | … | … | … | … | … | … | … | VLIW5 |

| → | instrase 6. | … | … | … | … | … | … | … | VLIW6. |

| → | instrase 7. | … | … | … | … | … | … | … | VLIW7. |

| → | instrase eight | … | … | … | … | … | … | … | VLIW8. |

| → | instrase nine | … | … | … | … | … | … | … | VLIW9. |

| → | instrase 10 | … | … | … | … | … | … | … | VLIW10 |

| → | instrase eleven | … | … | … | … | … | … | … | VLIW11 |

| → | instrase 12 | … | … | … | … | … | … | … | VLIW12. |

| → | instrase 13 | … | … | … | … | … | … | … | VLIW13 |

| → | instrase fourteen | … | … | … | … | … | … | … | VLIW14 |

| → | instrase fifteen | … | … | … | … | … | … | … | VLIW15 |

The 128 instructions of two wavefronts, each consisting of 64 operations, are executed by 16 VLIW modules in eight clock cycles. Because the wavefronts alternate, each module actually has two clock cycles to complete an instruction, while starting a new one (from the other wavefront) on the second cycle. This probably helps execute VLIW instructions such as A1 × A2 + B1 × B2 + C1 × C2 + D1 × D2 quickly, that is, to complete eight instructions in eight clock cycles (formally, one per cycle).
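The interleaving in the table can be regenerated from three numbers: wavefront size, number of VLIW modules and number of wavefronts. This sketch assumes the simple alternation shown in the table (wavefront 0, 1, 0, 1, ...). It reproduces the schedule only and does not claim to model the internal pipeline of the hardware.

```python
def interleaved_schedule(wavefront_size=64, modules=16, wavefronts=2):
    """schedule[module][clock] = (wavefront, operation_index) under simple alternation."""
    passes = wavefront_size // modules  # 4 passes of 16 operations per wavefront
    clocks = passes * wavefronts        # 8 clocks for two wavefronts
    schedule = [[None] * clocks for _ in range(modules)]
    for clock in range(clocks):
        wf = clock % wavefronts         # alternate wavefront 0, 1, 0, 1, ...
        block = clock // wavefronts     # which group of 16 operations
        for m in range(modules):
            schedule[m][clock] = (wf, block * modules + m)
    return schedule
```

Row 0 of the result reproduces the VLIW0 row of the table. Consecutive operations from the same wavefront land two clocks apart on each module, which gives every instruction its two-cycle window.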

NVIDIA apparently has no such technology. Without VLIW, achieving high performance with scalar instructions requires a high clock frequency, which automatically increases heat dissipation and places high demands on the manufacturing process (to run the circuitry at a higher frequency).

From the standpoint of GPU computing, Radeon's weakness is its strong dislike of branching. GPUs in general do not favor branching, because of the instruction execution technique described above: a whole group of threads with one program address executes at a time. (Incidentally, this technique is called SIMT, Single Instruction, Multiple Threads, by analogy with SIMD, where one instruction performs one operation on multiple data.) Radeon, however, dislikes branching especially, because of its large thread-bundle size. Clearly, if a program is not fully vectorizable, a larger warp or wavefront is worse: on divergence, more groups form that must be executed sequentially (serialized). Suppose all threads diverge: with a 32-thread warp the program will run 32 times slower, and with a 64-thread wavefront, as on Radeon, 64 times slower.
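The effect of bundle size also appears in the milder case of a simple two-way branch. Assume, as a simplification, that each thread independently takes the "if" path with probability p. Then both paths must be executed unless every lane agrees, so the expected number of passes is 2 − p^W − (1 − p)^W for a bundle of W threads. The sketch below computes that expectation. The independence assumption is ours; real branch outcomes are often correlated between neighboring threads.

```python
def expected_branch_passes(p, width):
    """Expected passes for a two-way branch in a bundle of `width` threads.

    Assumes each lane independently takes the 'if' path with probability p;
    one pass suffices only when all lanes agree.
    """
    all_if = p ** width
    all_else = (1.0 - p) ** width
    return 2.0 - all_if - all_else
```

With p = 0.01, a 32-thread warp pays both paths about 27% of the time, while a 64-thread wavefront pays them about 47% of the time. Rare branches therefore hurt a wider bundle noticeably more.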

This is a noticeable, but not the only, manifestation of this "hostility". On NVIDIA video cards, each functional unit (called a CUDA core) has a dedicated branch-handling unit, whereas Radeon cards have only two branch control units per 16 compute units (and they are separate from the arithmetic units). So even simply processing a conditional branch instruction takes extra time, even when its outcome is the same for all threads in the wavefront, and speed suffers.

AMD also makes CPUs. The company believes that programs with many branches are still better suited to the CPU, while the GPU is intended for purely vector programs.

So Radeon generally offers fewer opportunities for efficient programming, but in many cases delivers a better performance-to-price ratio. In other words, fewer programs can be ported efficiently (with a real gain) from the CPU to Radeon than to Fermi. But those that can be ported efficiently will, in many respects, run more efficiently on Radeon.

API for GPU computing

Radeon's technical specifications look attractive, even though GPU computing should not be idealized or treated as a cure-all. But equally important for performance is the software needed to develop and run GPU programs: compilers from high-level languages and the runtime, that is, the driver that mediates between the program running on the CPU and the GPU itself. This matters even more than it does for CPUs: a CPU program needs no driver to manage data transfers, and a GPU is more demanding of its compiler. For example, the compiler must make do with a minimum number of registers for intermediate results, and must inline function calls carefully, again using as few registers as possible. After all, the fewer registers each thread uses, the more threads can be launched, the more fully the GPU is loaded, and the better memory access latency is hidden.
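The register argument can be made concrete: the number of resident wavefronts is bounded by the register file divided by the registers one wavefront needs. A C++ sketch under stated assumptions: the register-file size and the scheduler limit are hypothetical, caller-supplied parameters, not actual Evergreen specifications.

```cpp
#include <algorithm>
#include <cassert>

// How many wavefronts can be resident on one SIMD engine at once.
// All inputs are hypothetical:
//   register_file      total registers available to the engine
//   regs_per_thread    registers the compiler allocated per work item
//   wavefront_size     work items per wavefront
//   hw_max_wavefronts  scheduler limit independent of registers
int resident_wavefronts(int register_file, int regs_per_thread,
                        int wavefront_size, int hw_max_wavefronts) {
    assert(regs_per_thread > 0 && wavefront_size > 0);
    const int by_registers = register_file / (regs_per_thread * wavefront_size);
    return std::min(by_registers, hw_max_wavefronts);
}
```

With an assumed budget of 16384 registers, 64-wide wavefronts and a limit of 32, cutting register use from 32 to 16 per thread raises the number of resident wavefronts from 8 to 16, giving the scheduler more work to switch to while memory accesses are pending.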

Software support for Radeon products, meanwhile, lags behind the hardware. (This is unlike NVIDIA, where the hardware release was postponed and the product shipped in a cut-down form.) Until recently, AMD's OpenCL compiler was in beta and had many flaws. It too often generated incorrect code, refused to compile correct source, or crashed with an error and hung. Only at the end of spring did a high-performance release come out. It is not free of bugs either, but there are far fewer of them, and they usually appear off the beaten path, when one tries to program something on the edge of correctness. An example is working with the uchar4 type, which defines a 4-byte, four-component variable. This type is in the OpenCL specification, but it is not worth using on Radeon, because the registers are 128-bit: the same four components, but each 32-bit. A uchar4 variable will still occupy a whole register, and extra operations will be needed to pack it and to access its individual byte components. A compiler should not have bugs, but no compiler is flawless: even the Intel compiler, after 11 versions, still has compilation bugs. The bugs found so far will be fixed in the next release, due closer to autumn.
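The overhead of `uchar4` comes from packing: when the hardware works on 32-bit components, reading or writing one byte of a packed value needs shifts and masks. The following CPU-side C++ sketch only illustrates those operations, assuming the four bytes are packed into one 32-bit word with component 0 in the lowest byte; it is not what the AMD compiler actually generates, and the function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Pack four 8-bit components into one 32-bit word (component 0 lowest).
std::uint32_t pack_uchar4(std::uint8_t x, std::uint8_t y, std::uint8_t z, std::uint8_t w) {
    return static_cast<std::uint32_t>(x)
         | (static_cast<std::uint32_t>(y) << 8)
         | (static_cast<std::uint32_t>(z) << 16)
         | (static_cast<std::uint32_t>(w) << 24);
}

// Reading one component costs a shift and a mask.
std::uint8_t get_component(std::uint32_t packed, int index) {
    assert(index >= 0 && index < 4);
    return static_cast<std::uint8_t>((packed >> (8 * index)) & 0xFFu);
}

// Writing one component costs a mask to clear it, a shift, and an OR.
std::uint32_t set_component(std::uint32_t packed, int index, std::uint8_t value) {
    assert(index >= 0 && index < 4);
    const int shift = 8 * index;
    packed &= ~(0xFFu << shift);  // clear the old byte
    return packed | (static_cast<std::uint32_t>(value) << shift);
}
```

With four separate 32-bit components, each of these accesses would be a plain register read or write.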

But much still needs refinement. For example, the standard Radeon GPU driver still lacks support for GPU computing with OpenCL: the user must download and install an additional package.

But the most important issue is the lack of any function libraries. For double-precision real numbers there is not even sine, cosine, or exponent. Of course, adding and multiplying matrices does not require them, but if you want to program something more complex, you have to write all the functions from scratch, or wait for a new SDK release. ACML (AMD Core Math Library) with support for the main matrix functions should soon be released for the Evergreen GPU family.
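To show what writing such a function from scratch involves, here is a minimal double-precision `exp` sketch in C++ using range reduction and a Taylor polynomial. It is an illustration rather than a production implementation: the 13-term polynomial, the function name, and the lack of overflow and special-value handling are assumptions of this sketch, and `std::ldexp` is used for the final scaling by 2^k for brevity.

```cpp
#include <cassert>
#include <cmath>

// exp(x) without a math library's exp: write x = k*ln2 + r with |r| <= ln2/2,
// evaluate e^r with a Taylor polynomial, then scale by 2^k.
// Sketch only: no overflow/underflow, NaN or infinity handling.
double exp_from_scratch(double x) {
    const double ln2 = 0.69314718055994530942;
    const int k = static_cast<int>(std::lround(x / ln2));
    const double r = x - k * ln2;

    // Horner evaluation of sum_{n=0}^{12} r^n / n!
    double sum = 1.0;
    for (int n = 12; n >= 1; --n) {
        sum = 1.0 + sum * r / n;
    }
    return std::ldexp(sum, k);  // sum * 2^k
}
```

Because |r| is at most about 0.35, the first omitted term r^13/13! is already below double-precision rounding, so a fixed short polynomial suffices.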

At the moment, in the author's opinion, the most reasonable way to program Radeon cards appears to be the Direct Compute 5.0 API, naturally taking its restriction into account: it targets the Windows 7 and Windows Vista platforms. Microsoft has a lot of experience building compilers, and since it has an interest in this, a fully efficient release can be expected very soon. But Direct Compute is aimed at the needs of interactive applications: compute something and immediately visualize the result, for example fluid flow over a surface. This does not mean it cannot be used purely for computation, but that is not its natural purpose. For example, Microsoft does not plan to add library functions to Direct Compute, precisely the ones AMD currently lacks. In other words, what can be computed efficiently on Radeon today, namely programs that are not too sophisticated, can be implemented in Direct Compute, which is much simpler than OpenCL and should be more stable. Moreover, it is fully portable and will work on both NVIDIA and AMD, so the program has to be compiled only once, whereas NVIDIA's and AMD's OpenCL SDK implementations are not entirely compatible. (That is, if you develop an OpenCL program on an AMD system using the AMD OpenCL SDK, it may not move to NVIDIA so easily; you may need to compile the same source with the NVIDIA SDK, and vice versa.)

Besides, OpenCL has a lot of redundant functionality, since it was conceived as a universal programming language and API for a wide range of systems: GPUs, CPUs, and Cell. So if you just need to write a program for a typical user system (a processor plus a video card), OpenCL does not feel, so to speak, "highly productive". Each function has ten parameters, nine of which must be set to 0, and setting each parameter requires calling a special function that also has parameters.

And Direct Compute's most important advantage right now is that the user does not need to install a special package: everything needed is already part of DirectX 11.

Problems of development of GPU-calculations

In the personal computer segment the situation is as follows: there are not many tasks that require large computing power and for which an ordinary dual-core processor is not enough. It is as if huge, voracious monsters had crawled out of the sea onto land and found almost nothing to eat there. Meanwhile the original inhabitants of the land shrink in size and learn to consume less, as always happens when natural resources are scarce. If the need for performance were now the same as 10-15 years ago, GPU computing would have taken off with a bang. As it is, compatibility problems and the relative complexity of GPU programming come to the fore. It is better to write a program that works on all systems than one that runs fast but only on a GPU.

GPU prospects are somewhat better in professional applications and the workstation segment, where performance requirements are higher. Plugins for 3D editors with GPU support are appearing, for example for ray-traced rendering (not to be confused with ordinary GPU rendering!). Something is appearing for 2D and presentation editors as well, accelerating the creation of complex effects. Video processing programs are also gradually gaining GPU support. Because of their parallel nature, these tasks map well onto the GPU architecture, but a very large code base already exists that is optimized for every CPU feature, so it will take time for good GPU implementations to appear.

This segment also exposes the GPU's weaknesses, such as limited video memory: about 1 GB on conventional GPUs. One of the main factors that reduce the performance of GPU programs is the need to exchange data between the CPU and GPU over a slow bus, and limited memory means more data has to be transferred. Here AMD's concept of combining the GPU and CPU in a single package looks promising: the high bandwidth of graphics memory can be sacrificed for easy, simple access to shared memory, with lower latency as well. The high bandwidth of current GDDR5 video memory is in much greater demand by graphics programs themselves than by most GPU-computing programs. In general, memory shared by the GPU and CPU will simply broaden the GPU's scope, making it possible to use its computing power in small subtasks of programs.
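Whether offloading pays off over a slow bus can be estimated with a simple model: GPU time is transfer time plus compute time, compared with CPU compute time. A C++ sketch; all rates are hypothetical inputs that would have to be measured, and the model ignores overlapping transfers with computation.

```cpp
#include <cassert>

// Back-of-the-envelope offload estimate. All rates are caller-supplied assumptions.
struct OffloadEstimate {
    double transfer_s;  // time to move data to and from the GPU
    double gpu_s;       // transfer + GPU compute
    double cpu_s;       // compute on the CPU alone
    bool worthwhile() const { return gpu_s < cpu_s; }
};

OffloadEstimate estimate_offload(double bytes_moved, double bus_bytes_per_s,
                                 double flops, double gpu_flops_per_s,
                                 double cpu_flops_per_s) {
    assert(bus_bytes_per_s > 0 && gpu_flops_per_s > 0 && cpu_flops_per_s > 0);
    OffloadEstimate e{};
    e.transfer_s = bytes_moved / bus_bytes_per_s;
    e.gpu_s = e.transfer_s + flops / gpu_flops_per_s;
    e.cpu_s = flops / cpu_flops_per_s;
    return e;
}
```

With assumed rates of 5 GB/s on the bus, 1 TFLOP/s on the GPU and 50 GFLOP/s on the CPU, moving 100 MB for 25 million operations is slower on the GPU (about 20 ms versus 0.5 ms), while moving 12 MB for 20 billion operations is much faster (about 22 ms versus 400 ms). The less data that has to cross the bus per operation, the better the GPU looks.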

GPUs are in greatest demand in scientific computing. Several GPU-based supercomputers have been built, and they show very high results in matrix-operation benchmarks. Scientific problems are so diverse and numerous that there is always a set of them that maps perfectly onto the GPU architecture, where using the GPU easily delivers high performance.

If you had to pick one task among everything modern computers do, it would be computer graphics: depicting the world we live in. An architecture optimal for this purpose cannot be bad. The task is so important and fundamental that hardware designed specifically for it is bound to be versatile and well suited to a variety of tasks, especially since video cards keep evolving successfully.

Today mining is a particularly hot topic, and many users want to know where to start mining coins and how it works. The popularity of this industry has already had a tangible impact on the graphics processor market, and for many people a powerful video card has long been associated not with demanding games but with mining farms. In this article we will explain how to organize the whole process from scratch and start mining on your own farm, what is used for it, and what pitfalls to avoid.

What is mining on a video card

Mining on a video card is the process of mining cryptocurrency using graphics processors (GPUs). It is done with a powerful video card in a home computer or with a specially assembled farm of several devices in one system. If you wonder why GPUs are used for this, the answer is very simple: video cards are designed from the start to process large amounts of data with operations of the same type, as in video processing. The same holds for cryptocurrency mining, because hashing is an equally uniform process.
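Why hashing suits GPUs can be seen from the shape of a proof-of-work search: every candidate nonce is hashed independently with the same operations. A toy C++ sketch; the 64-bit FNV-1a hash is a stand-in chosen for brevity and is not any real mining algorithm such as SHA-256 or Ethash, and the function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Toy stand-in for a mining hash (NOT SHA-256, Ethash, etc.): 64-bit FNV-1a
// over the block header followed by the 8 bytes of the nonce.
std::uint64_t toy_hash(const std::string& header, std::uint64_t nonce) {
    std::uint64_t h = 14695981039346656037ull;  // FNV offset basis
    auto mix = [&h](unsigned char byte) {
        h ^= byte;
        h *= 1099511628211ull;                  // FNV prime
    };
    for (unsigned char c : header) mix(c);
    for (int i = 0; i < 8; ++i) mix(static_cast<unsigned char>(nonce >> (8 * i)));
    return h;
}

// Proof-of-work search: return the first nonce whose hash is below target,
// or max_nonce if none is found in [0, max_nonce). Iterations do not depend
// on each other, so a GPU can test thousands of nonces at once, one per thread.
std::uint64_t find_nonce(const std::string& header, std::uint64_t target,
                         std::uint64_t max_nonce) {
    for (std::uint64_t nonce = 0; nonce < max_nonce; ++nonce) {
        if (toy_hash(header, nonce) < target) return nonce;
    }
    return max_nonce;
}
```

A lower target means more nonces must be tried on average, which is what rising network difficulty does to real miners.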

Mining uses full-fledged discrete video cards; laptops and integrated graphics are not used. Online you can also find articles about mining on an external video card, but this does not work in every case and is not the best solution either.

What video cards are suitable for mining

As for choosing a video card, the usual practice is to buy an AMD RX 470, RX 480, RX 570, RX 580 or an NVIDIA GTX 1060, 1070, 1080 Ti. Cards such as the R9 280X, R9 290, GTX 1050, and GTX 1060 are also suitable but will not bring big profits. Mining on a weak card like the GeForce GTX 460, GTS 450, or GTX 550 Ti will certainly not be profitable. As for memory, it is better to choose at least 2 GB: even 1 GB may not be enough, let alone 512 MB. A professional video card earns about as much as a consumer one, or even less. Given the cost of such cards, buying them for mining is unprofitable, but you can use one if you already have it.

It is also worth noting that any video card can gain performance by unlocking values beyond those set by the manufacturer. This process is called overclocking. However, it is unsafe: it voids the warranty, and the card may fail, for example by starting to show artifacts. You can overclock video cards, but first study the materials on the topic and proceed with caution. Do not set all values to the maximum at once; it is even better to find examples of successful overclocking settings for your particular card.

Most Popular Video Cards for Mining 2020

Below is a comparison of video cards. The table lists the most popular devices and their maximum power consumption. Note that these figures may vary depending on the specific card model, its manufacturer, the memory used, and some other characteristics. There is no point in listing outdated figures, such as Litecoin mining on a video card, so only the three most popular GPU mining algorithms are considered.

| Video card | Ethash (MH/s) | Equihash (H/s) | CryptoNight (H/s) | Power consumption (W) |
|---|---|---|---|---|
| AMD Radeon R9 280X | 11 | 290 | 490 | 230 |
| AMD Radeon RX 470 | 26 | 260 | 660 | 120 |
| AMD Radeon RX 480 | 29.5 | 290 | 730 | 135 |
| AMD Radeon RX 570 | 27.9 | 260 | 700 | 120 |
| AMD Radeon RX 580 | 30.2 | 290 | 690 | 135 |
| NVIDIA GeForce GTX 750 Ti | 0.5 | 75 | 250 | 55 |
| NVIDIA GeForce GTX 1050 Ti | 13.9 | 180 | 300 | 75 |
| NVIDIA GeForce GTX 1060 | 22.5 | 270 | 430 | 90 |
| NVIDIA GeForce GTX 1070 | 30 | 430 | 630 | 120 |
| NVIDIA GeForce GTX 1070 Ti | 30.5 | 470 | 630 | 135 |
| NVIDIA GeForce GTX 1080 | 23.3 | 550 | 580 | 140 |
| NVIDIA GeForce GTX 1080 Ti | 35 | 685 | 830 | 190 |
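Dividing the Ethash column by the power column gives energy efficiency, which matters at least as much as raw speed for a farm running around the clock. A small C++ sketch using figures from the table; the struct and function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// One table row: Ethash hashrate and maximum power draw.
struct CardFigures {
    std::string name;
    double ethash_mhs;  // MH/s
    double watts;       // W
};

double mhs_per_watt(const CardFigures& c) {
    assert(c.watts > 0);
    return c.ethash_mhs / c.watts;
}

// Index of the most energy-efficient card for Ethash (first one wins a tie).
std::size_t most_efficient(const std::vector<CardFigures>& cards) {
    assert(!cards.empty());
    std::size_t best = 0;
    for (std::size_t i = 1; i < cards.size(); ++i) {
        if (mhs_per_watt(cards[i]) > mhs_per_watt(cards[best])) best = i;
    }
    return best;
}
```

Comparing the RX 580 (about 0.22 MH/s per watt), the GTX 1060 (0.25) and the GTX 1080 Ti (about 0.18), the GTX 1060 comes out ahead despite having the lowest hashrate of the three.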

Is mining on a single video card possible?

If you do not want to build a full farm of many GPUs, or you just want to try the process on a home computer, you can mine with a single video card. There is no difference, and in general the number of devices in the system does not matter. Moreover, you can install cards with different chips or even from different manufacturers; you will only need to run two programs in parallel, one for each vendor's chips. Recall once again that integrated graphics are not used for mining.

What cryptocurrencies can be mined on video cards

Any cryptocurrency can be mined on a GPU, but keep in mind that the same card performs differently on different coins. Older algorithms are no longer well suited to graphics processors and will not bring any profit. This is due to the arrival of new devices on the market, the so-called ASICs. They are far more productive and significantly raise network difficulty, but they are expensive, costing thousands of dollars. Therefore, mining SHA-256 (Bitcoin) or Scrypt (Litecoin, Dogecoin) coins at home is a bad idea in 2018.

Besides LTC and DOGE, ASICs have made it impractical to mine Bitcoin (BTC), Dash, and other currencies. The best choice is cryptocurrencies whose algorithms are protected against ASICs. For example, a GPU can mine coins on the CryptoNight algorithm (Karbowanec, Monero, Electroneum, Bytecoin), Equihash (ZCash, Hush, Bitcoin Gold), and Ethash (Ethereum, Ethereum Classic). The list is far from complete, and new projects on these algorithms keep appearing, including both forks of more popular coins and entirely new developments. Occasionally new algorithms appear that are designed for specific tasks and can use different hardware. Below we will talk about how to find out the hashrate of video cards.

What you need for mining on a video card

Below is a list of what you will need to create a farm:

- The video cards themselves. The choice of specific models depends on your budget or on what you already have. Old AGP devices will not do, of course, but any mid-range or high-end card from recent years will. You can refer back to the video card performance table above to make an appropriate choice.

- A computer to install them in. There is no need for top-end hardware or a farm built on high-performance components. An old AMD Athlon, a few gigabytes of RAM, and a hard disk for the operating system and the required programs will be enough. The motherboard does matter: it must have enough PCI Express slots for your farm. There are special mining boards with 6-8 slots, and in some cases using them is more cost-effective than building several PCs. Pay particular attention to the power supply, because the system will run under high load around the clock. Choose a power supply with headroom, preferably with 80 PLUS certification. Two power supplies can also be combined using special adapters, but this solution is disputed on the Internet. It is better not to use a case at all; for better cooling, build or buy a special open frame. The video cards are then connected through special adapters called risers, which you can buy in specialized stores or on AliExpress.

- A well-ventilated, dry room. The farm is best placed in a non-residential room, ideally a separate one. This spares you the discomfort caused by the noise and heat of the cooling systems. If that is not possible, choose video cards with the quietest cooling systems; you can learn more about them from reviews on the Internet, for example on YouTube. You should also think about air circulation and ventilation to keep the temperature down.

- Mining program. GPU mining is done with special software that can be found on the Internet. Different software is used for ATI Radeon and NVIDIA cards, and the same applies to different algorithms.

- Equipment maintenance. This is a very important point, since not everyone realizes that a mining farm requires constant care. You need to monitor temperatures, replace thermal paste, and clean out dust. Also remember safety precautions and regularly check that the system is in good working order.

How to configure mining on a video card from scratch

In this section we will walk through the entire mining process, from choosing a currency to withdrawing funds. Note that the process may differ somewhat for different pools, programs, and chips.

How to choose a video card for mining

We recommend studying the table above and the section on calculating potential earnings. This will let you estimate approximate income, decide which hardware fits your budget, and understand how long the investment will take to pay back. Also do not forget about compatibility between the video cards' power connectors and the power supply. If they differ, buy the corresponding adapters in advance. All of this can be bought cheaply in Chinese online stores, or from local vendors at some markup.

Choose a cryptocurrency

Now it is important to decide which coin interests you and what goals you want to achieve. If you are after immediate earnings, choose the currencies that are most profitable at the moment and sell them right after receiving them. You can also mine the most popular coins and hold them until the price rises. There is also a strategic approach: you pick a little-known currency that looks promising to you and direct your hashing power at it, hoping its value will rise significantly in the future.

Choose a pool for mining

Pools also differ. Some require registration, while for others your wallet address is enough to start working. The former usually hold your earnings until the minimum payout amount is reached, or until you withdraw them manually. A good example of such a pool is suprnova.cc. It offers many cryptocurrencies, and to work in any of its pools you only need to register on the site once. The service is easy to set up and good for beginners.

MinerGate offers a similarly simplified system. If you do not want to register on a site and keep your earnings there, choose a pool from the official thread of the coin you are interested in on the Bitcointalk forum. Such simple pools only require the address to which coins are credited, and later you can use that address to look up mining statistics.

Create a cryptocurrency wallet

You can skip this step if you use a pool that requires registration and has a built-in wallet. If you want payments to go to your wallet automatically, read about creating a wallet in an article about the relevant coin. The process can differ significantly between projects.

You can also simply specify your wallet address on an exchange, but note that not all exchanges accept transactions from pools. The best option is to create a wallet directly on your computer, but if you work with many currencies, storing all the blockchains will be inconvenient. In that case, look for reliable online wallets or lightweight versions that do not require downloading the entire blockchain.

Choose and install the mining program

The choice of mining program depends on the selected coin and its algorithm. Probably all developers of such software have threads on Bitcointalk, where you can find download links and information on how to configure and launch their programs. Almost all of these programs have versions for both Windows and Linux. Most miners are free, but they spend a certain percentage of their time mining for the developer's pool. This is a kind of fee for using the software. In some cases it can be turned off, but this limits functionality.

Configuring the program means specifying the mining pool, your wallet address or login, the password (if any), and other options. It is recommended, for example, to set a maximum temperature at which the farm shuts down so as not to damage the video cards. You can also adjust fan speeds and other finer settings, which beginners are unlikely to use.

If you are not sure what to choose, see our dedicated material or study the instructions on the pool's website. There is usually a getting-started section listing the programs you can use and configurations for .bat files. With it you can quickly sort out the settings and start mining on your discrete video card. You can create a batch file for every currency you want to work with right away, so that switching between them is more convenient later.

Launch mining and monitor statistics

After you launch the .bat file with your settings, you will see a console window showing a log of what is happening. The log can also be found in the folder containing the launched file. The console shows the current hashrate and card temperature; current data can usually be displayed with hotkeys.

You can also see whether the device is failing to find hashes; in that case a warning is displayed. This happens when something is misconfigured, unsuitable software was chosen, or the GPU is not working properly with the coin. Many miners also use remote-access tools to monitor the farm when they are away from it.

Withdraw the cryptocurrency

If you use pools like Suprnova, all funds simply accumulate in your account and you can withdraw them at any time. Most other pools credit funds automatically to the specified wallet once the minimum payout amount is reached. You can usually see how much you have earned on the pool's website; you only need to enter your wallet address or log in to your account.

How much can you earn?

How much you can earn depends on the market situation and, of course, on your farm's total hashrate. Your strategy also matters: you do not have to sell everything you mine right away. You can, for example, wait for the mined coin's price to rise and sell more profitably. However, things are not so clear-cut, and predicting how events will unfold is simply unrealistic.

Video card payback

A special online calculator will help you calculate payback. There are many on the Internet; we will use the WhatToMine service as an example. It shows current profitability based on your farm's data: you only need to select the video cards you have and enter the cost of electricity in your region. The site will then calculate how much you can earn per day.
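The core of such a calculation can be sketched as: daily profit is revenue minus electricity cost, and payback is hardware cost divided by daily profit. A minimal C++ sketch under the same assumption the calculator makes, namely that current rates stay constant; all names and numbers are hypothetical.

```cpp
#include <cassert>

// Inputs for a simplified payback estimate (all hypothetical).
struct FarmEconomics {
    double hardware_cost;    // currency units
    double revenue_per_day;  // currency units/day at current rates
    double power_watts;      // total draw at the wall
    double price_per_kwh;    // electricity tariff
};

double daily_profit(const FarmEconomics& f) {
    const double kwh_per_day = f.power_watts / 1000.0 * 24.0;
    return f.revenue_per_day - kwh_per_day * f.price_per_kwh;
}

// Days until the hardware cost is recovered; -1 means it never pays back.
double payback_days(const FarmEconomics& f) {
    const double profit = daily_profit(f);
    return profit > 0 ? f.hardware_cost / profit : -1.0;
}
```

For example, a farm costing 1200 units, earning 4.0 per day and drawing 500 W at 0.10 per kWh uses 12 kWh (1.2 units) per day, so it makes 2.8 per day and pays back in roughly 429 days; at 0.40 per kWh it never pays back.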

Keep in mind that only the current market situation is taken into account, and it can change at any time. The exchange rate may fall or rise, mining difficulty may change, or new projects may appear. For example, Ether mining may end because of the network's possible transition to proof of stake. If Ether mining stops, farms will need to redirect their freed-up capacity elsewhere, for example to mining ZCash on GPUs, which will affect that coin's price. There are many similar scenarios on the market, and it is important to understand that today's picture may not hold for the entire payback period of the equipment.

Using the GPU for computation with C++ AMP

So far in our discussion of parallel programming techniques we have considered only CPU cores. We have gained some skill in parallelizing programs across multiple processors, synchronizing access to shared resources, and using fast lock-free synchronization primitives.

However, there is another way to parallelize programs: graphics processing units (GPUs), which have more cores than even high-end CPUs. GPU cores are well suited to implementing data-parallel algorithms, and their sheer number more than makes up for the inconvenience of programming them. In this article we will get acquainted with one way of running programs on the GPU, using a set of C++ language extensions called C++ AMP.

The C++ AMP extensions are based on C++, which is why the examples in this article are in C++. However, with moderate use of the .NET interop mechanism, you can use C++ AMP algorithms in your .NET programs; we will talk about this at the end of the article.

Introduction to C++ AMP

In essence, a GPU is a processor like any other, but with a special instruction set, a large number of cores, and its own memory access protocol. However, there are big differences between modern GPUs and ordinary CPUs, and understanding them is the key to writing programs that use the GPU's computing power effectively.

Modern GPUs have a very small instruction set. This implies certain limitations: no ability to call functions, a limited set of supported data types, no library functions, and others. Some operations, such as conditional branches, can cost considerably more than the same operations on a CPU. Obviously, moving large amounts of code from the CPU to the GPU under these conditions takes significant effort.
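One common way to sidestep expensive conditional branches is to replace them with arithmetic selection, so that all threads execute the same instructions. A C++ illustration of the idea on a simple clamp-at-zero function; the function names are hypothetical, the two versions agree only for finite inputs, and in practice a compiler may already turn the branching version into a select.

```cpp
#include <cassert>

// Branching version: threads of one warp/wavefront that disagree on the
// condition force both paths to be executed one after the other.
float relu_branch(float x) {
    if (x > 0.0f) return x;
    return 0.0f;
}

// Branch-free version: the condition becomes a 1.0f/0.0f factor,
// so every thread runs the same instruction stream.
float relu_select(float x) {
    const float mask = static_cast<float>(x > 0.0f);
    return mask * x;
}
```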

The average GPU has far more cores than the average CPU. However, some tasks are too small, or cannot be split into enough parts, to benefit from running on a GPU.

Synchronization support among GPU cores working on the same task is very limited, and it is entirely absent among cores working on different tasks. As a result, the GPU has to be synchronized with the CPU.

The question immediately arises: which tasks are suitable for the GPU? Keep in mind that not every algorithm can run on a GPU. For example, GPUs have no access to I/O devices, so you cannot speed up a program that fetches an RSS feed from the Internet by using a GPU. However, many computational algorithms can be moved to the GPU and massively parallelized. Below are a few examples of such algorithms (the list is not exhaustive):

- image sharpening and blurring, and other image transformations;

- the fast Fourier transform;

- matrix transposition and multiplication;

- sorting numbers;

- brute-force hash inversion.
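Matrix multiplication, from the list above, shows what makes these algorithms GPU-friendly: each output element can be computed independently from a two-dimensional (row, column) index. A plain C++ sketch of that formulation, with a serial double loop standing in for a parallel launch; the function names and the row-major layout are assumptions of this sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One "kernel" invocation: element (row, col) of C = A * B, where A is
// n x k and B is k x m, both stored row-major. No invocation depends on
// another, so on a GPU each could be a separate thread.
float matmul_kernel(const std::vector<float>& a, const std::vector<float>& b,
                    int k, int m, int row, int col) {
    float sum = 0.0f;
    for (int p = 0; p < k; ++p) {
        sum += a[row * k + p] * b[p * m + col];
    }
    return sum;
}

// Serial stand-in for launching matmul_kernel over the n x m index space.
std::vector<float> matmul(const std::vector<float>& a, const std::vector<float>& b,
                          int n, int k, int m) {
    assert(static_cast<int>(a.size()) == n * k && static_cast<int>(b.size()) == k * m);
    std::vector<float> c(static_cast<std::size_t>(n) * m);
    for (int row = 0; row < n; ++row)
        for (int col = 0; col < m; ++col)
            c[row * m + col] = matmul_kernel(a, b, k, m, row, col);
    return c;
}
```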

An excellent source of further examples is Microsoft's Native Concurrency blog, which offers code samples with explanations for various algorithms implemented in C++ AMP.

C++ AMP is a framework included in Visual Studio 2012 that gives C++ developers a simple way to perform computations on the GPU and requires only DirectX 11 drivers. Microsoft has released C++ AMP as an open specification that any compiler vendor can implement.

The C++ AMP framework lets you execute code on graphics accelerators, which are computing devices. Using the DirectX 11 driver, C++ AMP dynamically detects all accelerators. C++ AMP also includes a software accelerator emulator and a CPU-based emulator, WARP, which serves as a fallback on systems without a GPU, or with a GPU but no DirectX 11 driver, and uses multiple cores and SIMD instructions.

Now let us look at an algorithm that can easily be parallelized for execution on the GPU. The implementation below takes two vectors of the same length and computes first[i] + exp(second[i]) for each element. It is hard to imagine anything more straightforward:

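```cpp
#include <cmath>

// Serial version: result[i] = first[i] + exp(second[i]) for every element.
void VectorAddExpPointwise(float* first, float* second, float* result, int length) {
    for (int i = 0; i < length; ++i) {
        result[i] = first[i] + std::exp(second[i]);
    }
}
```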

To parallelize this algorithm on a CPU, you need to split the iteration range into several sub-ranges and run one thread for each. In previous articles we devoted a lot of time to exactly this way of parallelizing our first example, finding prime numbers: we saw how to do it by creating threads manually, by passing work items to the thread pool, and by using Parallel.For and PLINQ for automatic parallelization. Remember also that when parallelizing such algorithms on a CPU, we took special care not to split the problem into tasks that are too small.
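The chunking described above can be sketched with standard C++ threads. The earlier articles used .NET threads, the thread pool, Parallel.For and PLINQ; `std::thread` is a substitute here, and the function name is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <thread>
#include <vector>

// Split [0, length) into roughly equal sub-ranges, one thread per sub-range.
// Threads write disjoint parts of result, so no synchronization is needed
// beyond joining them at the end.
void VectorAddExpPointwiseThreads(const float* first, const float* second,
                                  float* result, int length, int num_threads) {
    assert(num_threads > 0);
    std::vector<std::thread> workers;
    const int chunk = (length + num_threads - 1) / num_threads;
    for (int begin = 0; begin < length; begin += chunk) {
        const int end = std::min(begin + chunk, length);
        workers.emplace_back([=] {
            for (int i = begin; i < end; ++i) {
                result[i] = first[i] + std::exp(second[i]);
            }
        });
    }
    for (auto& w : workers) w.join();
}
```

Each thread gets a contiguous block of about length / num_threads elements, which keeps the per-thread overhead small relative to the work, the concern raised above for CPUs.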

For the GPU, these warnings do not apply. GPUs have many cores that execute threads very quickly, and context switching is much cheaper than on CPUs. Below is a fragment that uses the parallel_for_each function from the C++ AMP framework:

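```cpp
// Sketch reconstructed from the explanation that follows; it needs a
// compiler with C++ AMP support (for example, Visual C++ 2012).
#include <amp.h>
#include <amp_math.h>
using namespace concurrency;

void VectorAddExpPointwise(float* first, float* second, float* result, int length) {
    // const element type: copied to the accelerator, never copied back
    array_view<const float, 1> avFirst(length, first);
    array_view<const float, 1> avSecond(length, second);
    array_view<float, 1> avResult(length, result);
    avResult.discard_data();  // skip copying result's old contents to the GPU

    parallel_for_each(avResult.extent, [=](index<1> i) restrict(amp) {
        avResult[i] = avFirst[i] + fast_math::exp(avSecond[i]);
    });

    avResult.synchronize();   // copy the results back into result
}
```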

Now let us examine each part of the code separately. Note first that the overall shape of the main loop has been preserved, but the original for loop has been replaced by the parallel_for_each function. Converting a loop into a call to a function or method is not new to us: we have already demonstrated this technique with Parallel.For() and Parallel.ForEach() from the TPL.

Next, the input data (the parameters first, second, and result) are wrapped in array_view instances. The array_view class wraps data passed to the GPU (the accelerator). Its template parameters specify the element type and the rank (number of dimensions). For the GPU to execute instructions that access data originally processed on the CPU, someone or something must take care of copying the data to the GPU, because most modern graphics cards are separate devices with their own memory. array_view instances solve this problem: they copy data on demand, and only when it is really needed.

When the GPU finishes the task, the data is copied back. We create the array_view instances for first and second with a const element type. This guarantees that they are copied into GPU memory but never copied back. Similarly, calling discard_data() prevents result from being copied from CPU memory to accelerator memory, although its data will still be copied in the opposite direction.
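The copy decisions described above can be summarized in a toy model. `ToyViewPolicy` is purely hypothetical bookkeeping and does not reflect how C++ AMP implements array_view. It only encodes the two rules from the text: a const view is never copied back, and a discarded view is never copied in.

```cpp
#include <cassert>

// Toy model (hypothetical, not the C++ AMP implementation) of the two copy
// rules described for array_view.
struct ToyViewPolicy {
    bool read_only = false;  // like array_view<const T, N>
    bool discarded = false;  // like calling discard_data() before the kernel

    // Does the host data have to be copied to the accelerator before the kernel?
    bool copy_in() const { return !discarded; }
    // May the accelerator data have to be copied back to the host afterwards?
    bool copy_out() const { return !read_only; }
};
```

Under this model, the inputs first and second are copied in only, and result is copied out only. That is exactly the one-way traffic the example is designed to achieve.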

The parallel_for_each function takes an extent object, which defines the shape of the data being processed, and a function to apply to each element of that extent. In the example above we used a lambda function, a feature introduced in the ISO C++ 2011 standard (C++11). The restrict(amp) keyword instructs the compiler to check that the function body can be executed on the GPU. It also disables most of the C++ syntax that cannot be compiled into GPU instructions.

The lambda's parameter, an index<1> object, represents a one-dimensional index. It must match the extent object being used. Had we declared a two-dimensional extent (for example, to describe the input data as a two-dimensional matrix), the index would also have to be two-dimensional. An example of this situation appears just below.
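To illustrate the rule that the index rank must match the extent rank, here is a serial plain-C++ sketch. The helper `for_each_index_2d` is hypothetical. It walks a two-dimensional extent and hands the body a two-component index, much as a lambda over extent<2> must accept index<2>.

```cpp
#include <array>
#include <cassert>

// Serial stand-in for iterating a 2-D extent: the body receives a 2-D index
// (row, column), just as a lambda over extent<2> must take index<2>.
template <typename Body>
void for_each_index_2d(std::array<int, 2> extent, Body body) {
    for (int row = 0; row < extent[0]; ++row) {
        for (int col = 0; col < extent[1]; ++col) {
            body(std::array<int, 2>{row, col});
        }
    }
}
```

A 3 x 4 extent produces 12 invocations, and the last one receives the index (2, 3).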

Finally, the call to the synchronize() method at the end of VectorAddExpPointwise guarantees that the results the GPU computed into the array_view avResult are copied back to the result array.

This concludes our first look at the world of C++ AMP. We are now ready for a more detailed exploration and for more interesting examples that demonstrate the benefits of parallel computing on the GPU. Vector addition is not a good algorithm for demonstrating the GPU, because the overhead of copying the data is large compared with the work done. The next subsection shows two more interesting examples.

Matrix multiplication

The first "real" example we will consider is matrix multiplication. We will implement the straightforward cubic algorithm rather than the Strassen algorithm, whose running time is about O(n^2.807), slightly below cubic. Given a matrix A of size m x w and a matrix B of size w x n, the following program multiplies them and returns the result, a matrix of size m x n:

void MatrixMultiply(int* A, int m, int w, int* B, int n, int* C) {
    // A is m x w, B is w x n, C is m x n; all stored row-major
    for (int i = 0; i < m; ++i) {
        for (int j = 0; j < n; ++j) {
            int sum = 0;
            for (int k = 0; k < w; ++k) {
                sum += A[i * w + k] * B[k * n + j];
            }
            C[i * n + j] = sum;
        }
    }
}

This implementation can be parallelized in several ways. On a regular CPU, parallelizing the outer loop would be enough. A GPU, however, has so many cores that parallelizing only the outer loop would not create enough tasks to keep all of them busy. It therefore makes sense to parallelize the two outer loops and leave the inner loop intact:
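The effect of parallelizing both outer loops can be seen in a serial plain-C++ sketch. The function `matrix_multiply_2d` is hypothetical, and row-major storage in flat vectors is an assumption. It collapses the (i, j) loops into a single space of m * n independent work items, one per output element. This is the same decomposition that parallel_for_each over an m x n extent uses. For 1024 x 1024 matrices, that gives about a million work items instead of the 1024 you get by parallelizing only the outer loop.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Matrix multiplication where the two outer loops are collapsed into m * n
// independent work items (one per element of C). Each item could run as a
// separate GPU thread; here they run one after another.
std::vector<int> matrix_multiply_2d(const std::vector<int>& A, int m, int w,
                                    const std::vector<int>& B, int n) {
    assert(static_cast<int>(A.size()) == m * w);
    assert(static_cast<int>(B.size()) == w * n);
    std::vector<int> C(static_cast<std::size_t>(m) * n);
    for (int item = 0; item < m * n; ++item) {
        const int i = item / n;  // row of C
        const int j = item % n;  // column of C
        int sum = 0;
        for (int k = 0; k < w; ++k) {  // the inner loop stays sequential
            sum += A[i * w + k] * B[k * n + j];
        }
        C[item] = sum;
    }
    return C;
}
```

Multiplying the 2 x 3 matrix {1, 2, 3, 4, 5, 6} by the 3 x 2 matrix {7, 8, 9, 10, 11, 12} gives {58, 64, 139, 154}.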

void MatrixMultiply(int* A, int m, int w, int* B, int n, int* C) { array_view

This implementation still closely resembles the sequential matrix multiplication and the vector addition example above. The main difference is that the index is now two-dimensional, and its components are accessed in the inner loop with the [] operator. How much faster is this version than the sequential alternative running on a CPU? Multiplying two 1024 x 1024 integer matrices takes 7350 milliseconds on average with the sequential CPU version. The GPU version (hold on tight) takes 50 milliseconds, which is 147 times faster!
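The timings above can be put in perspective with a little arithmetic. The speedup comes directly from the quoted figures. The multiply-add count assumes the straightforward cubic algorithm, with one multiply-add per innermost iteration:

```latex
\begin{aligned}
\text{speedup} &= \frac{7350\ \text{ms}}{50\ \text{ms}} = 147 \\
1024^3 &= 1\,073\,741\,824 \approx 1.07 \times 10^{9}\ \text{multiply-adds} \\
\text{GPU: } \frac{1.07 \times 10^{9}}{0.05\ \text{s}} &\approx 2.1 \times 10^{10}\ \text{multiply-adds/s} \\
\text{CPU: } \frac{1.07 \times 10^{9}}{7.35\ \text{s}} &\approx 1.5 \times 10^{8}\ \text{multiply-adds/s}
\end{aligned}
```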

Particle motion simulation

The GPU examples presented above have a very simple inner loop, and that will obviously not always be the case. The Native Concurrency blog, already referenced above, demonstrates an example that simulates gravitational interactions between particles. The simulation runs for an unbounded number of steps. At each step, a new acceleration vector is computed for every particle, and then the particles' new positions are determined. What gets parallelized here is the vector of particles. With a sufficiently large number of particles (several thousand or more), you can create enough tasks to keep all the GPU cores busy.

At the core of the algorithm is a function that computes the result of the interaction between two particles. As shown below, it can easily be ported to the GPU:

// Here float4 is a four-element vector
// representing the particles involved in the operation
void bodybody_interaction(float4& acceleration, const float4 p1, const float4 p2) restrict(amp) {
    float4 dist = p2 - p1;
    // w is not used
    float absDist = dist.x * dist.x + dist.y * dist.y + dist.z * dist.z;
    float invDist = 1.0f / sqrt(absDist);
    float invDistCube = invDist * invDist * invDist;
    acceleration += dist * particle_mass * invDistCube;
}

The input of each simulation step is an array of particle positions and velocities. The computation produces a new array with updated positions and velocities:

struct particle {
    float4 position, velocity;
    // The constructor, copy constructor and operator=
    // with restrict(amp) are omitted to save space
};
void simulation_step(array
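For readers without a C++ AMP toolchain, here is a serial plain-C++ sketch of one simulation step. It uses the same interaction arithmetic as bodybody_interaction. Several parts are assumptions rather than the blog's code: the types `Vec4` and `Particle`, the `mass` and `dt` parameters, and the semi-implicit Euler update (velocity first, then position). The blog's sample may integrate differently. Self-interaction is skipped because a zero distance would divide by zero.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical plain-C++ stand-ins for float4 and the particle struct.
struct Vec4 { float x, y, z, w; };
struct Particle { Vec4 position, velocity; };

// Same arithmetic as bodybody_interaction: accumulate the acceleration that
// p2 induces on p1 (the w component is ignored).
void body_body_interaction(Vec4& acc, const Vec4& p1, const Vec4& p2, float mass) {
    const float dx = p2.x - p1.x, dy = p2.y - p1.y, dz = p2.z - p1.z;
    const float abs_dist = dx * dx + dy * dy + dz * dz;
    const float inv_dist = 1.0f / std::sqrt(abs_dist);
    const float inv_dist_cube = inv_dist * inv_dist * inv_dist;
    acc.x += dx * mass * inv_dist_cube;
    acc.y += dy * mass * inv_dist_cube;
    acc.z += dz * mass * inv_dist_cube;
}

// One step: read the old array, write a new one. The loop over i is the part
// a GPU version would distribute across threads (one particle per thread).
std::vector<Particle> simulation_step(const std::vector<Particle>& in, float mass, float dt) {
    std::vector<Particle> out(in.size());
    for (std::size_t i = 0; i < in.size(); ++i) {
        Vec4 acc{0, 0, 0, 0};
        for (std::size_t j = 0; j < in.size(); ++j) {
            if (j != i) {  // skip self-interaction (distance 0)
                body_body_interaction(acc, in[i].position, in[j].position, mass);
            }
        }
        Particle p = in[i];
        p.velocity.x += acc.x * dt;  // semi-implicit Euler: velocity first...
        p.velocity.y += acc.y * dt;
        p.velocity.z += acc.z * dt;
        p.position.x += p.velocity.x * dt;  // ...then position with the new velocity
        p.position.y += p.velocity.y * dt;
        p.position.z += p.velocity.z * dt;
        out[i] = p;
    }
    return out;
}
```

The doubly nested loop makes each step O(N^2). With 10,000 particles that is about 10^8 interactions per step, which explains why the sequential version manages only a few steps per second.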

Combined with a suitable graphical interface, the simulation can be quite fascinating to watch. The complete example from the C++ AMP development team can be found on the Native Concurrency blog. My system has an Intel Core i7 processor and a GeForce GT 740M graphics card. On it, the sequential CPU version simulates the motion of 10,000 particles at about 2.5 frames (steps) per second. The optimized GPU version reaches 160 frames per second, roughly 64 times faster: a huge performance gain.

Before finishing this section, we need to describe another important feature of the C++ AMP framework that can further improve the performance of GPU code. Graphics processors support a programmable data cache, often called shared memory. The values stored in this cache are shared by all threads of execution in the same tile. A C++ AMP program can read data from graphics card memory into the tile's shared memory and then access it from several threads without fetching it again from graphics card memory. Accessing tile shared memory is about 10 times faster than accessing graphics card memory. In other words, you have good reason to keep reading.
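A back-of-the-envelope count shows why tile reuse matters for matrix multiplication. This is a simplified model and an assumption, since it ignores hardware caches and counts only reads of A and B. Without tiling, every element of C reads a full row of A and a full column of B from graphics card memory. With T x T tiles, each tile loads its blocks of A and B into shared memory once, and all T * T threads in the tile reuse them.

```cpp
#include <cassert>
#include <cstdint>

// Naive kernel: each of the m * n output elements reads w values of A and
// w values of B from graphics-card memory.
std::int64_t naive_global_reads(std::int64_t m, std::int64_t w, std::int64_t n) {
    return m * n * 2 * w;
}

// Tiled kernel with T x T tiles: (m/T) * (n/T) tiles, each stepping w/T times
// and loading two T x T blocks per step, which equals 2*m*n*w / T.
std::int64_t tiled_global_reads(std::int64_t m, std::int64_t w, std::int64_t n, std::int64_t T) {
    assert(m % T == 0 && w % T == 0 && n % T == 0);
    return (m / T) * (n / T) * 2 * T * w;
}
```

For 1024 x 1024 matrices and 16 x 16 tiles, this gives 2,147,483,648 reads without tiling versus 134,217,728 with tiling. That is a 16-fold reduction, equal to the tile width.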

To run the tiled version of the parallel loop, two things change. First, parallel_for_each is passed a tiled_extent domain, which divides a multidimensional extent object into multidimensional tiles. Second, the lambda takes a tiled_index parameter, which identifies the thread both globally and locally within its tile. For example, a 16x16 matrix can be divided into 2x2 tiles (as shown in the figure below) and then passed to the parallel_for_each function:

extent<2> matrix(16, 16);
tiled_extent<2, 2> tiledMatrix = matrix.tile<2, 2>();
parallel_for_each(tiledMatrix, [=](tiled_index<2, 2> idx) restrict(amp) {
    // ...
});
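The relationship between the global, tile and local indexes can be modeled in plain C++. `TiledIndex2` and `make_tiled_index` are hypothetical names, not the C++ AMP types. In each dimension, global = tile * tile_size + local. A 16 x 16 extent split into 2 x 2 tiles therefore yields 8 x 8 = 64 tiles of 4 threads each.

```cpp
#include <array>
#include <cassert>

// Hypothetical plain-C++ model of the three views of a thread's position
// in a tiled 2-D extent.
struct TiledIndex2 {
    std::array<int, 2> global;  // position in the whole extent
    std::array<int, 2> tile;    // which tile the thread belongs to
    std::array<int, 2> local;   // position inside that tile
};

// Decompose a global (row, col) for tiles of size T0 x T1.
template <int T0, int T1>
TiledIndex2 make_tiled_index(int row, int col) {
    TiledIndex2 t;
    t.global = {row, col};
    t.tile = {row / T0, col / T1};
    t.local = {row % T0, col % T1};
    return t;
}
```

For instance, the element at (5, 10) of the 16 x 16 matrix lies in tile (2, 5) at local position (1, 0).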

Each of the four threads belonging to the same tile can share the data stored in that tile.

When working with matrices in a GPU kernel, you can use idx.global instead of the plain index<2> from the examples above. Careful use of tile-local memory and local indexes can yield a significant performance gain. To declare tile memory shared by all threads in one tile, declare local variables with the tile_static specifier.

In practice, a common pattern is to declare the shared memory and have different threads initialize its individual parts:

parallel_for_each(tiledMatrix, [=](tiled_index<2, 2> idx) restrict(amp) {
    // An array shared by all threads in the tile
    tile_static int local[2][2];
    // Assign the value to this thread's element
    local[idx.local[0]][idx.local[1]] = 42;
});

Obviously, shared memory only pays off if access to it is synchronized: threads must not read the memory until the thread responsible for it has initialized it. Threads in a tile are synchronized with tile_barrier objects, which resemble the Barrier class from the TPL library. A thread that calls tile_barrier.wait() continues only after all threads in the tile have called tile_barrier.wait(). For example:

parallel_for_each(tiledMatrix, [=](tiled_index<2, 2> idx) restrict(amp) {
    // An array shared by all threads in the tile
    tile_static int local[2][2];
    // Assign the value to this thread's element
    local[idx.local[0]][idx.local[1]] = 42;
    // idx.barrier is a tile_barrier instance
    idx.barrier.wait();
    // Now this thread can access the "local" array
    // using the indexes of other threads!
});
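The guarantee a tile barrier provides can be illustrated with a serial emulation. This is not real concurrency, and `run_tile_with_barrier` is a hypothetical name. The barrier splits the kernel body into two phases, and every "thread" of a 2 x 2 tile finishes phase 1 (writing its own slot) before any thread starts phase 2 (reading a neighbor's slot).

```cpp
#include <array>
#include <cassert>

// Serial emulation of one 2 x 2 tile (4 threads). Running all of phase 1
// before any of phase 2 mimics the ordering that idx.barrier.wait() enforces.
std::array<int, 4> run_tile_with_barrier() {
    const int threads = 4;
    std::array<int, 4> local{};  // stands in for tile_static storage
    std::array<int, 4> out{};
    // Phase 1: each thread writes only its own slot.
    for (int t = 0; t < threads; ++t) {
        local[t] = 42 + t;
    }
    // Barrier: every write above is complete before the reads below.
    // Phase 2: each thread reads a slot written by a different thread.
    for (int t = 0; t < threads; ++t) {
        out[t] = local[(t + 1) % threads];
    }
    return out;
}
```

Without the barrier, a real GPU thread could reach phase 2 while its neighbor is still in phase 1 and read an uninitialized value.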

Now it is time to apply this knowledge to a concrete example. Let us return to the matrix multiplication implemented without tiled memory and add the optimization described above. Suppose the matrix size is a multiple of 256, which lets us work with 16 x 16 blocks. Matrices can be multiplied block by block, and we can take advantage of this property. In fact, dividing matrices into blocks is a typical optimization of matrix multiplication that makes more efficient use of the processor cache.

The technique works as follows. To find C(i,j), the element in row i and column j of the result matrix, you need to compute the dot product of A(i,*), the i-th row of the first matrix, and B(*,j), the j-th column of the second matrix. However, this is equivalent to computing partial dot products of segments of the row and column and then summing the results. We can use this fact to convert the multiplication algorithm into a tiled version:
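The decomposition can be written as C(i,j) = sum over t of (sum over k in [t*T, t*T + T) of A(i,k) * B(k,j)). Each inner sum is the partial dot product of one T-wide segment. The following serial plain-C++ sketch applies it. `matrix_multiply_tiled` is a hypothetical name, and row-major storage with dimensions divisible by T are assumptions. Copying each pair of blocks into small local arrays stands in for staging them in tile_static memory on the GPU.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Blocked matrix multiplication: for each T x T block of C, step along the
// shared dimension in T-wide segments, stage the matching blocks of A and B
// in local buffers, and add their partial dot products into C.
template <int T>
std::vector<int> matrix_multiply_tiled(const std::vector<int>& A, int m, int w,
                                       const std::vector<int>& B, int n) {
    assert(m % T == 0 && w % T == 0 && n % T == 0);
    std::vector<int> C(static_cast<std::size_t>(m) * n, 0);
    for (int ti = 0; ti < m; ti += T) {            // block row of C
        for (int tj = 0; tj < n; tj += T) {        // block column of C
            for (int tk = 0; tk < w; tk += T) {    // segment of the shared dimension
                int a_tile[T][T], b_tile[T][T];    // stand-ins for tile_static arrays
                for (int r = 0; r < T; ++r) {
                    for (int c = 0; c < T; ++c) {
                        a_tile[r][c] = A[(ti + r) * w + (tk + c)];
                        b_tile[r][c] = B[(tk + r) * n + (tj + c)];
                    }
                }
                // Partial dot products for this segment, accumulated into C.
                for (int r = 0; r < T; ++r) {
                    for (int c = 0; c < T; ++c) {
                        int partial = 0;
                        for (int k = 0; k < T; ++k) {
                            partial += a_tile[r][k] * b_tile[k][c];
                        }
                        C[(ti + r) * n + (tj + c)] += partial;
                    }
                }
            }
        }
    }
    return C;
}
```

On the GPU, each (r, c) pair inside a block would be one thread of a T x T tile. The two staging loops would be split so that each thread loads one element of each block, with a barrier before the partial products are computed.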